Vision-Based Autonomous Driving System (ViBADS)

Overview

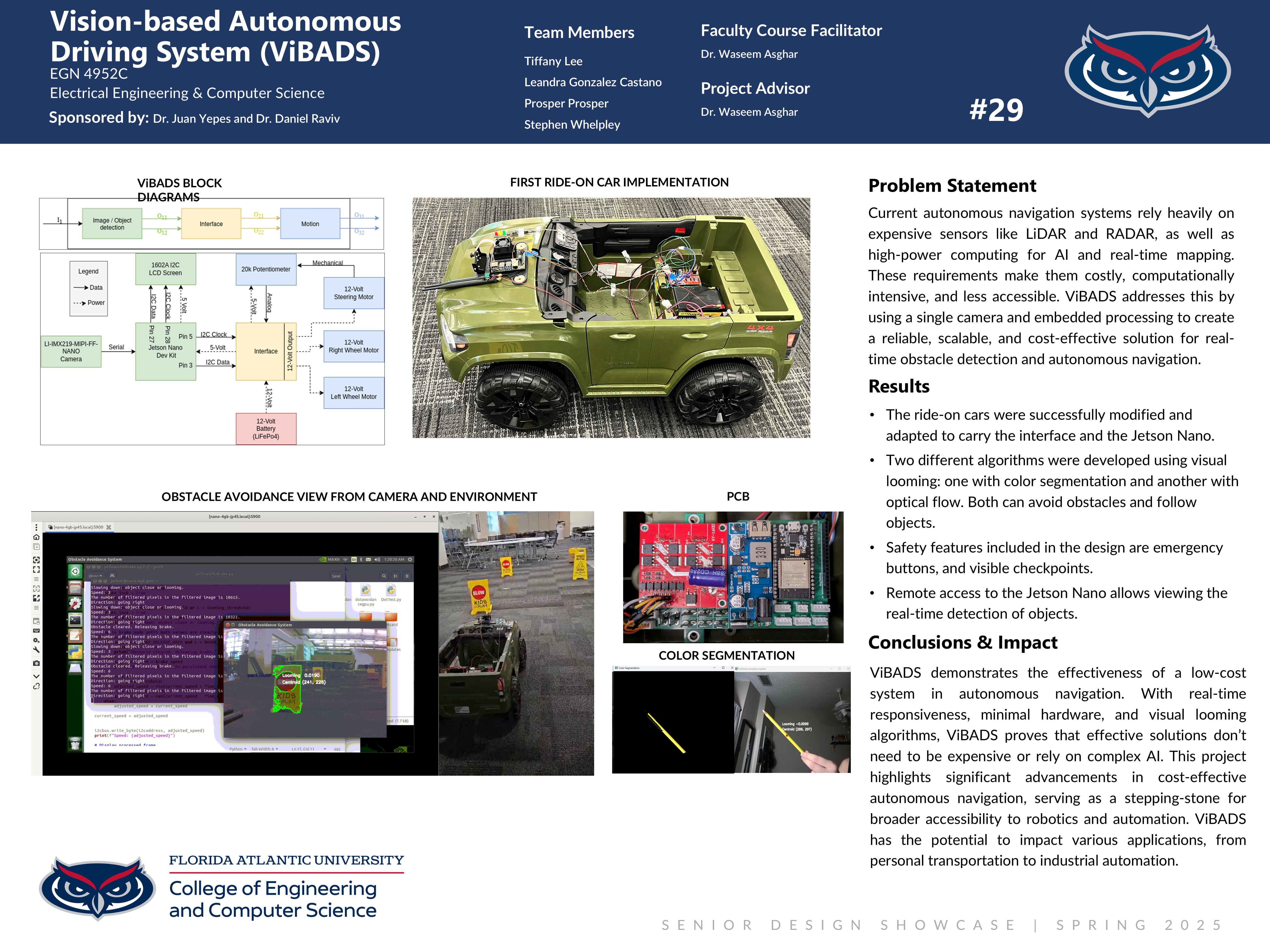

The Vision-Based Autonomous Driving System (ViBADS) is a low-cost, visually guided autonomous navigation solution designed for ride-on vehicles. Instead of relying on expensive sensors such as LiDAR or on complex AI models, ViBADS uses a single forward-facing camera and visual looming algorithms to detect obstacles and adjust steering and speed in real time. Built on a Jetson Nano and an ESP32, the system processes visual data to drive safely through dynamic environments, such as the FAU breezeway, without human input. ViBADS is designed to be affordable, scalable, and efficient, making it a promising approach for real-world autonomous mobility applications.
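To illustrate the idea behind visual looming, here is a minimal Python sketch; it is not the team's implementation. It assumes that an obstacle's apparent size in the image (for example, its width in pixels) is already being tracked between frames. For an object approaching head-on, the relative rate at which that size grows approximates the looming value, which is the inverse of the time to contact. The function names, the linear speed rule, the steering rule, and all thresholds and gains below are hypothetical choices made for illustration.

```python
def looming_from_sizes(size_prev: float, size_curr: float, dt: float) -> float:
    """Estimate looming (in 1/s) as the relative expansion rate of image size.

    Assumption: size_prev and size_curr are the apparent sizes (e.g. pixel
    widths) of the same obstacle in two frames taken dt seconds apart.
    """
    if size_prev <= 0 or size_curr <= 0 or dt <= 0:
        raise ValueError("sizes and dt must be positive")
    mean_size = 0.5 * (size_prev + size_curr)
    return (size_curr - size_prev) / (dt * mean_size)


def speed_command(looming: float, cruise_speed: float = 1.0,
                  stop_looming: float = 1.0) -> float:
    """Hypothetical rule: reduce speed linearly as looming rises.

    Returns cruise_speed when nothing is approaching and 0.0 once looming
    reaches stop_looming (an illustrative threshold, not a tuned value).
    """
    if looming <= 0.0:
        return cruise_speed
    return cruise_speed * max(0.0, 1.0 - looming / stop_looming)


def steering_command(left_looming: float, right_looming: float,
                     gain: float = 1.0) -> float:
    """Hypothetical rule: steer away from the side with higher looming.

    Positive output means steer right; the result is clamped to [-1, 1].
    """
    raw = gain * (left_looming - right_looming)
    return max(-1.0, min(1.0, raw))


if __name__ == "__main__":
    # An obstacle grows from 40 px to 44 px wide over 0.1 s.
    loom = looming_from_sizes(40.0, 44.0, 0.1)
    print(f"looming = {loom:.3f} 1/s, time to contact = {1.0 / loom:.2f} s")
    print(f"speed command = {speed_command(loom):.3f}")
    print(f"steering command = {steering_command(loom, 0.2):.3f}")
```

A looming-based rule like this needs no distance measurement: it uses only how quickly an obstacle expands in the image, which is why a single camera is enough.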

Community Benefit

ViBADS provides a practical step forward in making autonomous vehicle technology more accessible to communities, schools, and research institutions with limited resources. By eliminating the need for costly sensors and high-powered computing, this project demonstrates that safe, real-time navigation can be achieved affordably.

Team Members

Sponsored By

Dr. Juan Yepes and Dr. Daniel Raviv