Projects

Machine-to-Machine Coding Standards

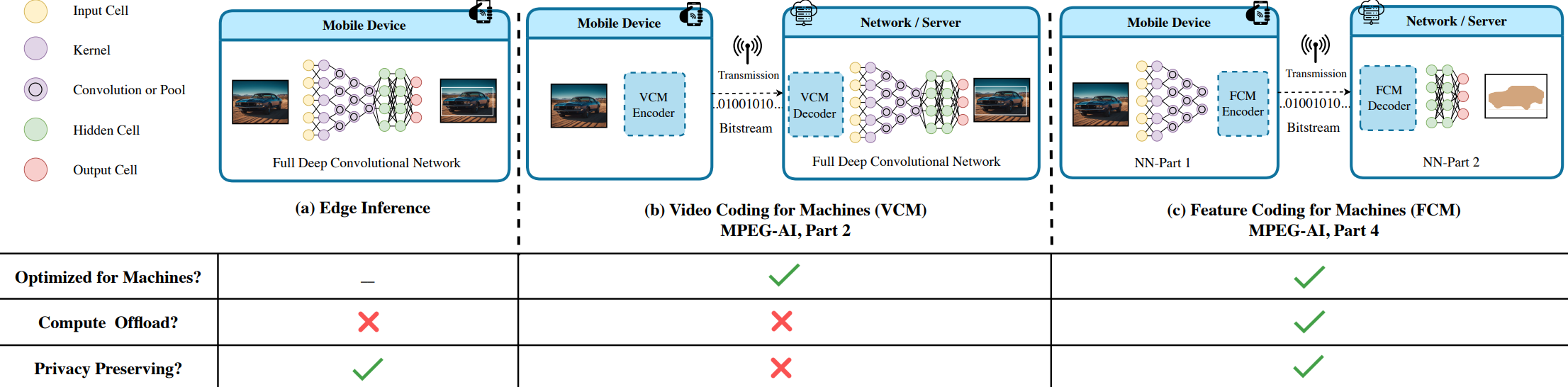

Machines are increasingly becoming the primary consumers of visual data, yet most deployments of machine-to-machine systems still rely on remote inference, where pixel-based video is streamed using codecs optimized for human perception. Consequently, this paradigm is bandwidth-intensive, scales poorly, and exposes raw images to third parties. Recent efforts in the Moving Picture Experts Group (MPEG) have redesigned the pipeline for machine-to-machine communication: Video Coding for Machines (VCM) is designed to apply task-aware coding tools in the pixel domain, and Feature Coding for Machines (FCM) is designed to compress intermediate neural features to reduce bitrate, preserve privacy, and support compute offload.

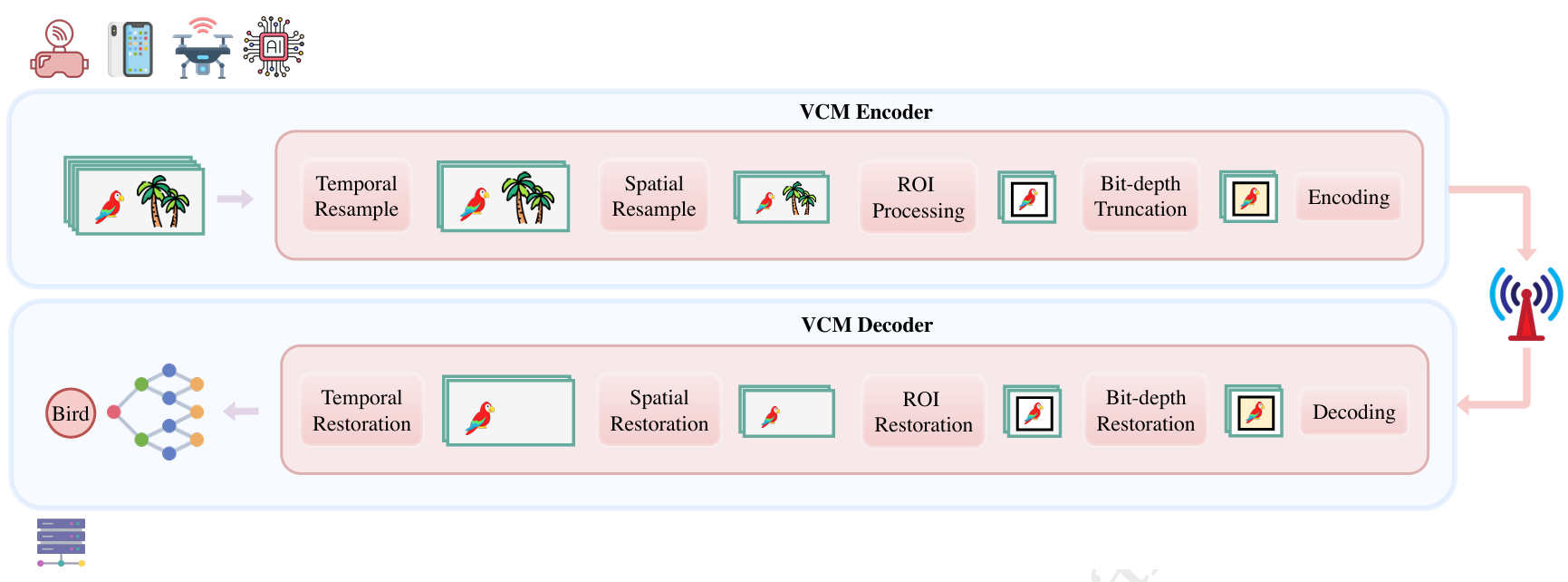

Video Coding for Machines (VCM)

Traditionally, compression techniques have been optimized for visual quality as perceived by humans, leveraging the characteristics of the human visual system (HVS) to balance quality and efficiency. For instance, the Joint Photographic Experts Group (JPEG) image standard uses predefined quantization tables to discard frequency components that are less noticeable to human vision, achieving compression while maintaining perceptual quality. However, the rise of artificial intelligence (AI) and the growth of machine-to-machine (M2M) communication have shifted the focus of compression from human-centric optimization to machine-oriented processing. As automated systems that analyze and act on content without human intervention become increasingly prevalent, developing compression techniques optimized for machine vision tasks has become an urgent and critical research area. Video Coding for Machines (VCM) provides one such solution: compression does not prioritize preserving visually pleasing details but instead focuses on retaining the features essential for specific machine tasks.
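The JPEG quantization step mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not an encoder: the table is the example luminance table from Annex K of the JPEG standard (ITU-T T.81), while the coefficient block and the function names `quantize` and `dequantize` are assumptions made up for this sketch.

```python
# Example luminance quantization table from Annex K of ITU-T T.81.
# Entries grow toward the bottom-right, i.e. toward higher spatial frequencies.
JPEG_LUMA_Q = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def quantize(coeffs, table=JPEG_LUMA_Q):
    """Divide each DCT coefficient by its table entry and round to an integer level."""
    return [[round(c / q) for c, q in zip(c_row, q_row)]
            for c_row, q_row in zip(coeffs, table)]


def dequantize(levels, table=JPEG_LUMA_Q):
    """Approximate the original coefficients from the integer levels."""
    return [[lv * q for lv, q in zip(l_row, q_row)]
            for l_row, q_row in zip(levels, table)]


# Hypothetical 8x8 block of DCT coefficients (assumption, not real image data):
# magnitude decays with the frequency index u + v, as is typical for natural images.
coeffs = [[400.0 / (1 + u + v) ** 2 for v in range(8)] for u in range(8)]

levels = quantize(coeffs)
nonzero = sum(1 for row in levels for lv in row if lv != 0)
print(f"{nonzero} of 64 coefficients are nonzero after quantization")
```

Because the divisors are larger at high frequencies, small high-frequency coefficients round to zero and cost almost nothing to entropy-code. That trade-off is tuned to human perception, which is exactly what a machine-oriented codec may want to revisit.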

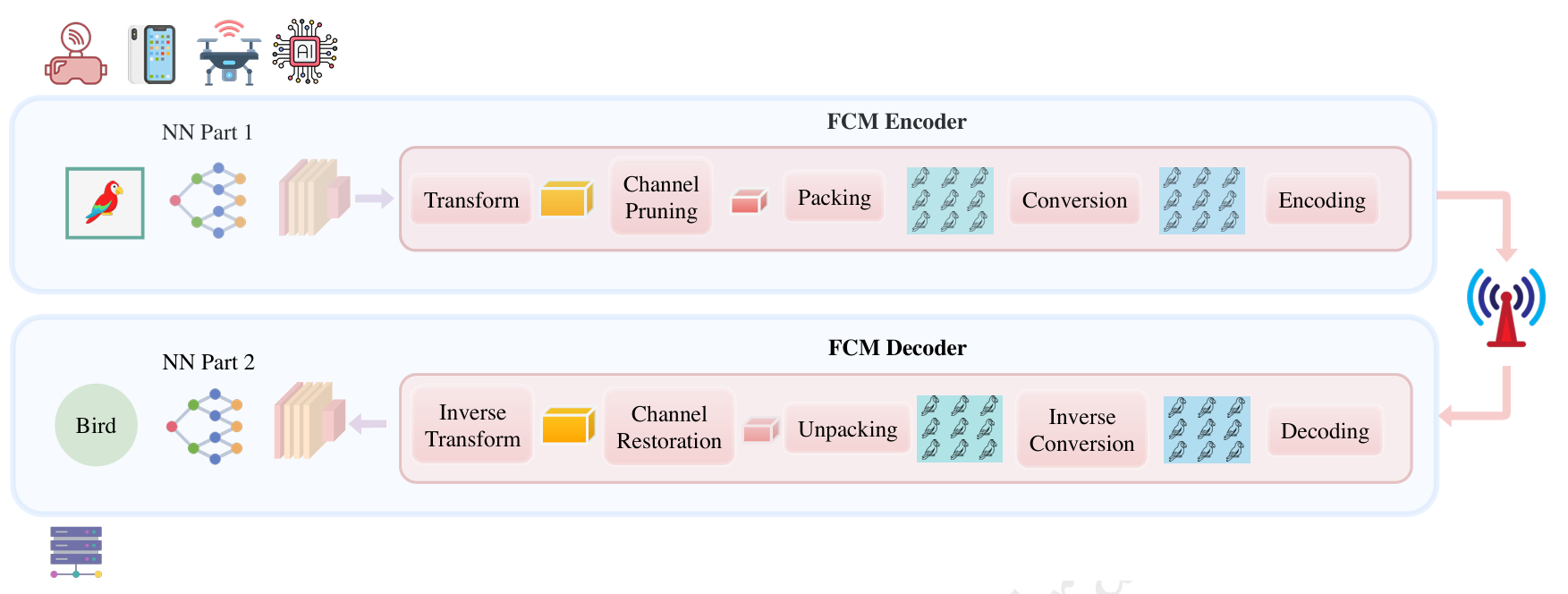

Feature Coding for Machines (FCM)

In Feature Coding for Machines (FCM), a neural network is partitioned such that the early layers are executed on the edge device, generating intermediate features. These features are then transmitted to the cloud, where the remaining computation is performed. This architecture leverages the device’s available compute and enables more efficient load balancing between clients and servers. It also offers strong privacy, since intermediate network features are transmitted rather than the actual video. Read more at: Md Eimran Hossain Eimon, Velibor Adzic, Hari Kalva, and Borko Furht. 2026. Emerging Standards for Machine-to-Machine Video Coding. In Proceedings of the 5th Mile-High Video Conference (MHV '26). Association for Computing Machinery, New York, NY, USA, 128–134. https://doi.org/10.1145/3789239.3793282
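The split-inference idea described above can be illustrated with a toy example. The sketch below is only an assumption-laden stand-in: the tiny random network, the split point, and the uniform 8-bit quantizer in `encode_features` are hypothetical and are not the FCM codec or any tool from the standard.

```python
import random


def dense_relu(x, weights):
    """One fully connected layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def run_layers(x, layers):
    """Run the input through a list of layers in order."""
    for weights in layers:
        x = dense_relu(x, weights)
    return x


def encode_features(features, bits=8):
    """Uniformly quantize features to integers (stand-in for a real feature codec)."""
    lo, hi = min(features), max(features)
    scale = (hi - lo) / (2 ** bits - 1) or 1.0  # avoid a zero step for flat features
    return [round((f - lo) / scale) for f in features], lo, scale


def decode_features(levels, lo, scale):
    """Reconstruct approximate features from the integer levels."""
    return [lo + lv * scale for lv in levels]


random.seed(0)
sizes = [16, 12, 8, 4]  # hypothetical layer widths
layers = [[[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
          for n_in, n_out in zip(sizes, sizes[1:])]

split = 1  # layers before this index run on the edge device
head, tail = layers[:split], layers[split:]

x = [random.uniform(0, 1) for _ in range(sizes[0])]

# Edge device: run the early layers and compress the intermediate features.
features = run_layers(x, head)
levels, lo, scale = encode_features(features)

# Cloud: decode the features and finish the computation.
decoded = decode_features(levels, lo, scale)
output = run_layers(decoded, tail)

# Reference: the unsplit network on the same input.
reference = run_layers(x, layers)
max_err = max(abs(a - b) for a, b in zip(output, reference))
print(f"max output difference after 8-bit feature coding: {max_err:.4f}")
```

Only `levels`, `lo`, and `scale` cross the network in this sketch; the input `x` never leaves the edge device. Moving `split` later shifts more computation to the device and changes the size of the transmitted feature vector.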

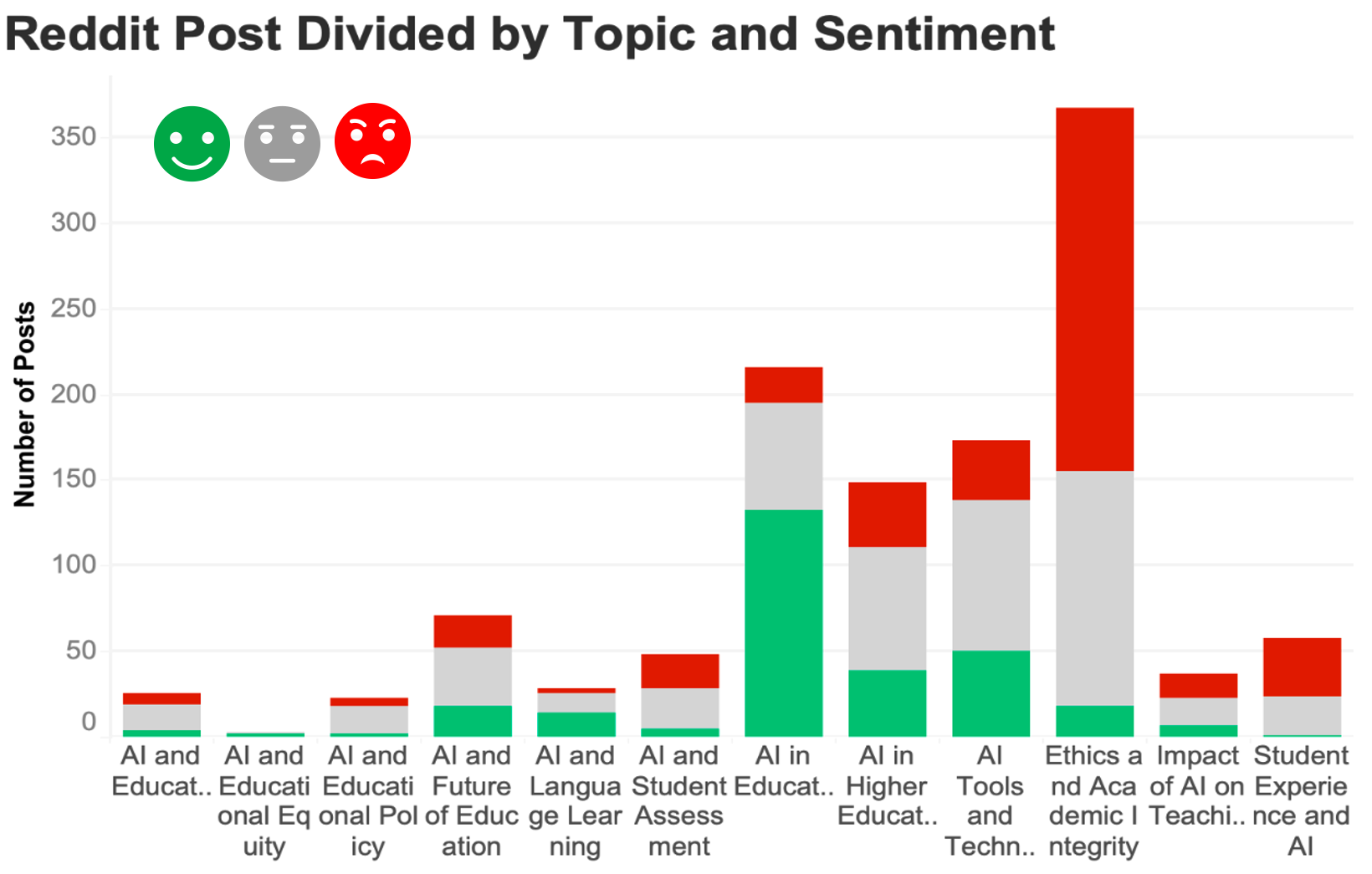

Integrating AI in Education Using Social Media Analysis

Generative AI (GAI) tools such as ChatGPT, Copilot, Gemini, and Claude have drastically reshaped education. GAI tools have impressive capabilities in areas like personalized learning, grading, language translation, and content creation. However, there are also concerns about negative effects on learning outcomes, including over-reliance on AI tools. Using social media data related to GAI tools in education, this study aims to equip stakeholders with an understanding of attitudes towards this technology and with data-driven recommendations on potential next steps in K-12 and higher education. We have found that while students and educators generally have a positive view of personalized learning with GAI technology, their personal accounts also reveal a concerning trend of dependency on fundamentally flawed AI detectors to assess student work. Moving forward, addressing this pressing issue should be a top priority. Educational alternatives, such as in-class knowledge assessments or integrating AI into the learning process, should be urgently considered. Extensions based on the key findings of this study are currently being explored.

AI-Powered Smart Cameras for Advanced Wildlife Monitoring

The demand for a reliable, affordable camera trap designed for conservation biology, especially for tracking meso- and megaherbivores, is met by our development of a Smart Wildlife Camera with Artificial Intelligence and Machine Learning. The expensive, feature-rich commercial alternatives available today are prone to false triggers from environmental factors such as moving vegetation. In addition, they are frequently damaged in challenging field conditions and run into configuration issues during extensive deployments. With Artificial Intelligence and Machine Learning capabilities, this project offers a robust, low-cost design that can minimize false triggers, improve usability, and reduce operating costs. To simplify setup and reduce human error, the design also supports batch configuration of the camera software, addressing the specific requirements of biodiversity monitoring while remaining durable in harsh conditions.

Security & Privacy Issues in CV and DL

Adversarial attacks have emerged as a pivotal area of research in contemporary machine learning. This work is dedicated to understanding these attacks, which involve crafting malicious inputs to deceive AI systems, and to developing strategies to mitigate them. The focus lies on evaluating and exposing vulnerabilities in deep learning models, particularly those employed in computer vision tasks. The research covers both black-box and white-box adversarial attacks, devising methods that highlight the susceptibility of current models and proposing robust defense mechanisms. Experimental findings provide significant insights into enhancing model resilience, offering practical solutions for critical applications such as healthcare, autonomous systems, and cybersecurity. By addressing these pressing challenges, this research contributes to the development of safer and more reliable AI systems, advancing the field toward greater trustworthiness and robustness in deep learning and computer vision.
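To make the notion of a white-box attack concrete, the sketch below applies the well-known fast gradient sign method (FGSM) to a tiny logistic-regression classifier. It is an illustration only and not necessarily a method used in this project: the weights, input, and perturbation budget `eps` are hypothetical values chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """Perturb x by eps in the direction that increases the cross-entropy loss.

    For logistic regression the loss gradient with respect to the input is
    (p - y) * w, which a white-box attacker can compute because w is known.
    """
    p = predict(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and input (assumptions for illustration).
w, b = [2.0, -1.5, 0.5], -0.2
x, y = [0.6, 0.2, 0.4], 1

x_adv = fgsm(x, y, w, b, eps=0.3)
p_clean = predict(x, w, b)
p_adv = predict(x_adv, w, b)
print(f"clean: {p_clean:.3f}  adversarial: {p_adv:.3f}")
```

Each input value moves by at most `eps`, yet the predicted probability of the true class drops below 0.5, so the classification flips. A black-box attacker lacks access to `w` and would have to estimate the gradient through queries or a surrogate model.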