Emotion Recognition

Slide-1

FAU REU 2017 SUMMER PROJECT

JACOB BELGA

EMMANUEL DAMOUR

Slide-2

INTRODUCTION: JACOB BELGA

- Currently enrolled at FAU High

- Pursuing a degree in Computer Science

- Working with Dr. Hallstrom this Summer

Slide-3

INTRODUCTION: EMMANUEL DAMOUR

- Currently enrolled at Georgia State University

- Born in New York

- Raised in Philadelphia

Slide-4

PROJECT: EMOTION RECOGNITION

- Speech Analysis

  - Sentiment Analysis

  - Tonal Feature Analysis

- Machine Learning

  - Multi-layer Perceptron

  - Training Data Set

Slide-5

SENTIMENT ANALYSIS

- Analyzes words individually

- Compares words with respect to one another

- Outputs relative positivity, negativity, and neutrality
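The three bullets above can be illustrated with a minimal sketch. It assumes a lexicon-based approach, which the slides do not confirm: `LEXICON`, `NEGATORS`, and `sentiment_scores` are hypothetical names, the word list is a toy stand-in, and "comparing words with respect to one another" is reduced to a simple negation rule.

```python
# Hypothetical toy lexicon; a real system would use a far larger word list.
LEXICON = {
    "happy": 1.0, "great": 1.0, "love": 1.0,
    "sad": -1.0, "terrible": -1.0, "hate": -1.0,
}
# Words that flip the polarity of the next scored word (a simplification).
NEGATORS = {"not", "never", "no"}


def sentiment_scores(text):
    """Return the share of positive, negative, and neutral words in text."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return {"positive": 0.0, "negative": 0.0, "neutral": 0.0}

    counts = {"positive": 0, "negative": 0, "neutral": 0}
    negate = False
    for word in words:
        if word in NEGATORS:
            # The negator itself carries no sentiment; remember it for later.
            negate = True
            counts["neutral"] += 1
            continue
        score = LEXICON.get(word, 0.0)
        if score == 0.0:
            counts["neutral"] += 1
            continue
        if negate:
            # Word-to-word context: a preceding negator inverts the score.
            score = -score
            negate = False
        counts["positive" if score > 0 else "negative"] += 1

    return {label: count / len(words) for label, count in counts.items()}
```

Calling `sentiment_scores("I do not love this")` counts "love" as negative because of the preceding "not", which is the kind of word-to-word comparison the slide describes.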

Slide-6

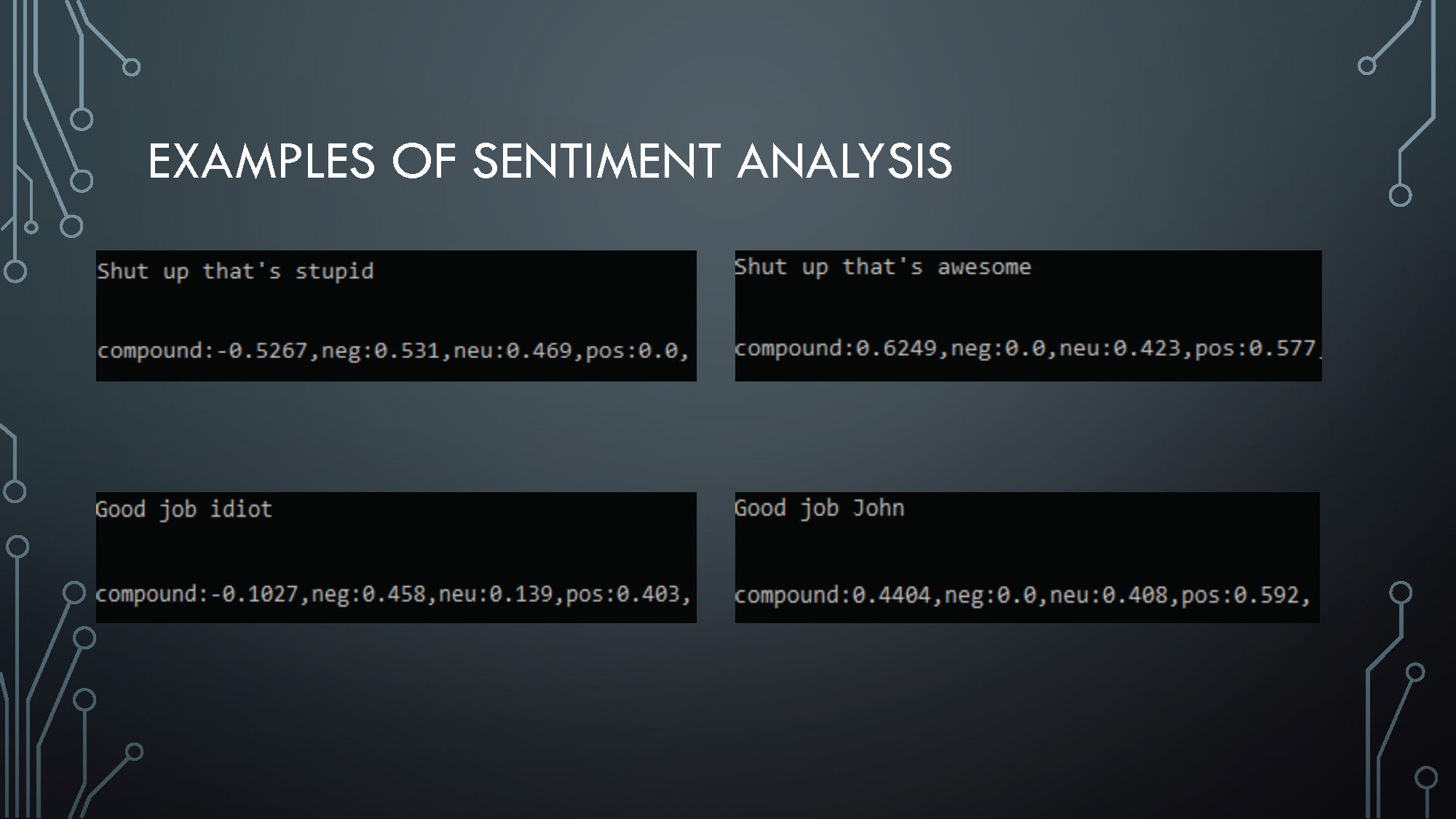

EXAMPLES OF SENTIMENT ANALYSIS

This slide shows examples of how sentiment analysis works on different phrases and sentences to determine emotional content.

Slide-7

TONAL FEATURE ANALYSIS

- Analyzes tonal qualities of speech

- Utilizes Fast Fourier Transform (FFT)

- Outputs array data of amplitude, power, and frequency
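As a rough illustration of the FFT step, the sketch below computes frequency, amplitude, and power arrays from a block of samples. It is an assumption-laden example, not the project's code: `fft` and `spectrum` are hypothetical names, the transform is a plain recursive radix-2 Cooley-Tukey FFT that needs a power-of-two input length, and the amplitude scaling is one common convention among several.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; length must be a power of two."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("sample count must be a power of two")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def spectrum(samples, sample_rate):
    """Return (frequencies in Hz, amplitudes, powers) for the lower half of the bins."""
    n = len(samples)
    bins = fft(samples)[: n // 2]
    frequencies = [k * sample_rate / n for k in range(n // 2)]
    amplitudes = [abs(b) / n for b in bins]
    powers = [a * a for a in amplitudes]
    return frequencies, amplitudes, powers


# Example: a pure 8 Hz tone sampled at 64 Hz for one second.
sample_rate = 64
tone = [math.sin(2 * math.pi * 8 * t / sample_rate) for t in range(64)]
frequencies, amplitudes, powers = spectrum(tone, sample_rate)
peak_frequency = frequencies[amplitudes.index(max(amplitudes))]
```

For the synthetic tone, the largest amplitude falls in the 8 Hz bin; real speech would produce a much broader spectrum.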

Slide-8

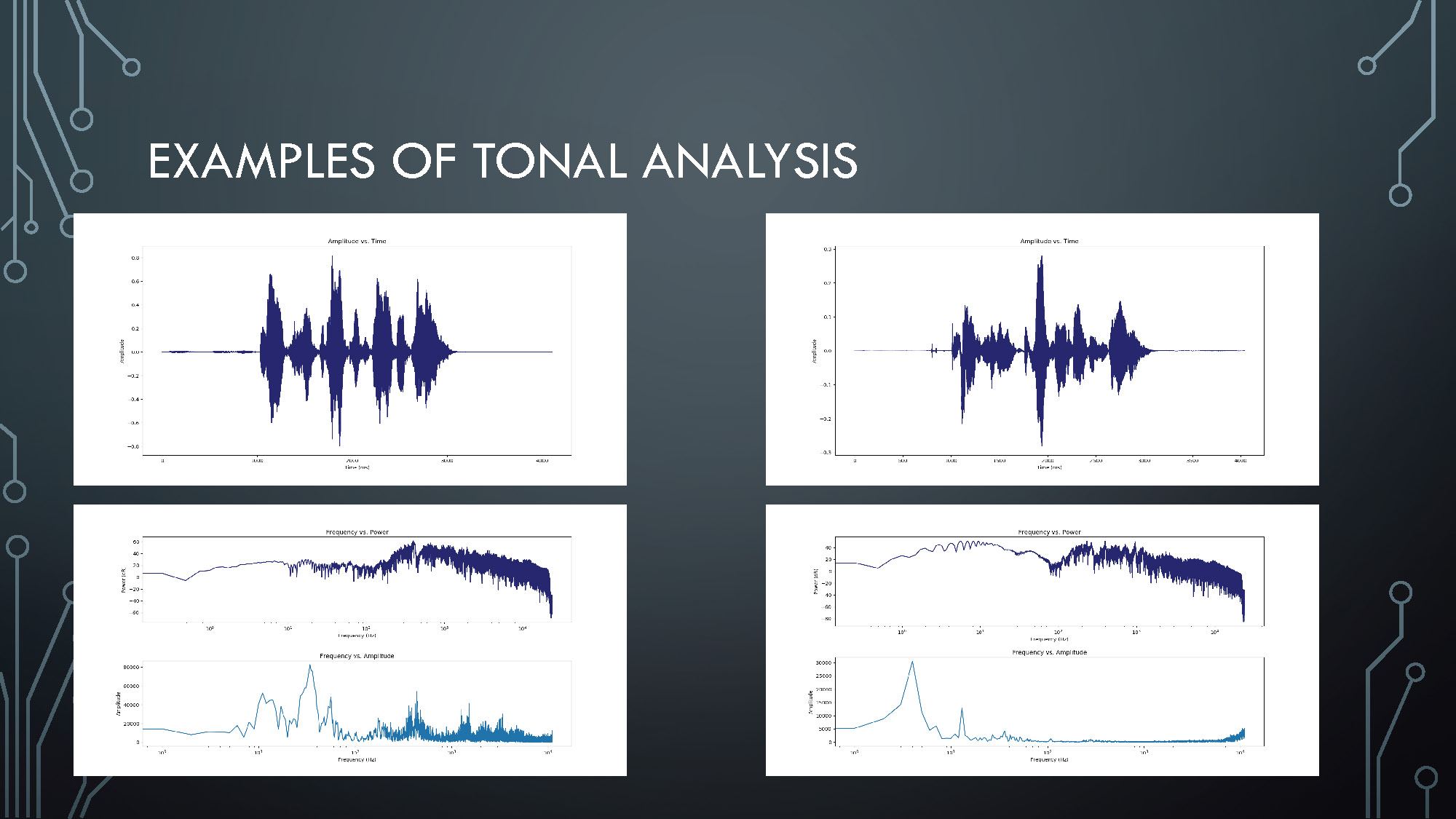

EXAMPLES OF TONAL ANALYSIS

Two graphs are shown demonstrating frequency analysis:

Frequency vs. Amplitude

Graph showing the relationship between frequency (Hz) and amplitude in speech signal analysis.

Additional frequency analysis graph showing tonal feature extraction from speech signals.

Slide-9

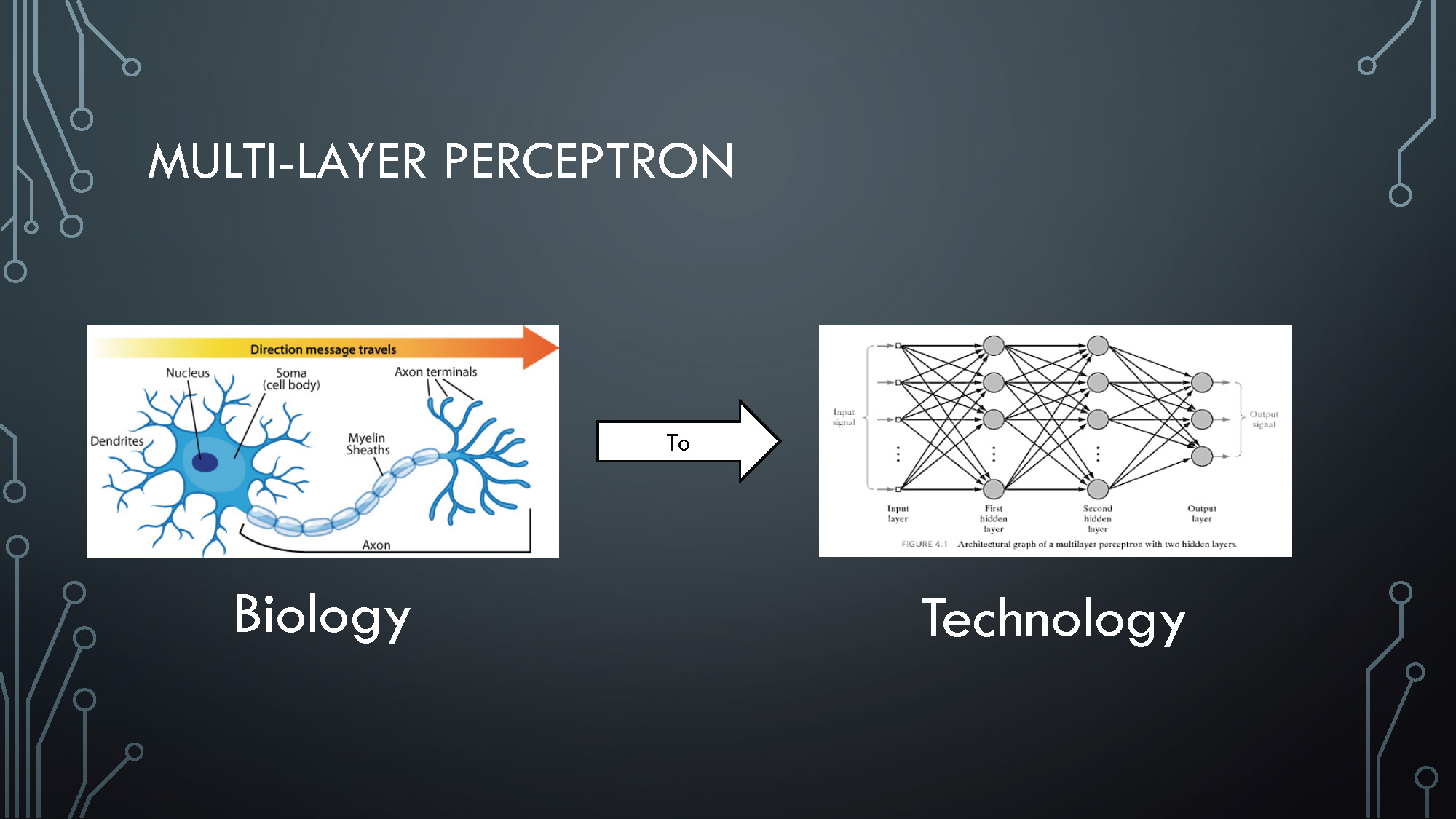

MULTI-LAYER PERCEPTRON

Diagram showing the relationship between:

Biology

Biological neural network structure

Technology

Artificial neural network implementation with multiple layers for machine learning processing
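To make the "multiple layers" idea concrete, here is a minimal sketch of a forward pass through a multi-layer perceptron. Everything in it is an assumption for illustration: the layer sizes, the five-element feature vector, the tanh and softmax activations, and the names `make_layer`, `softmax`, and `forward` are hypothetical. Only the four emotion labels come from the presentation. The weights are random, so the prediction is meaningless until the network is trained.

```python
import math
import random

EMOTIONS = ["happy", "sad", "angry", "calm"]


def make_layer(n_inputs, n_outputs, rng):
    """Create one fully connected layer with random weights and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    return weights, [0.0] * n_outputs


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(layers, features):
    """Pass a feature vector through every layer in turn."""
    activations = list(features)
    for index, (weights, biases) in enumerate(layers):
        sums = [sum(w * a for w, a in zip(row, activations)) + b
                for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        # Hidden layers use tanh; the output layer uses softmax.
        activations = softmax(sums) if is_output else [math.tanh(s) for s in sums]
    return activations


rng = random.Random(0)
# Hypothetical shape: 5 input features, 8 hidden units, 4 emotion outputs.
layers = [make_layer(5, 8, rng), make_layer(8, len(EMOTIONS), rng)]
probabilities = forward(layers, [0.4, 0.0, 0.6, 8.0, 0.5])
predicted = EMOTIONS[probabilities.index(max(probabilities))]
```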

Slide-10

TRAINING DATA SET

- Ryerson University Speech/Song data set

- Focusing on four emotion types:

  - Happy

  - Sad

  - Angry

  - Calm

Slide-11

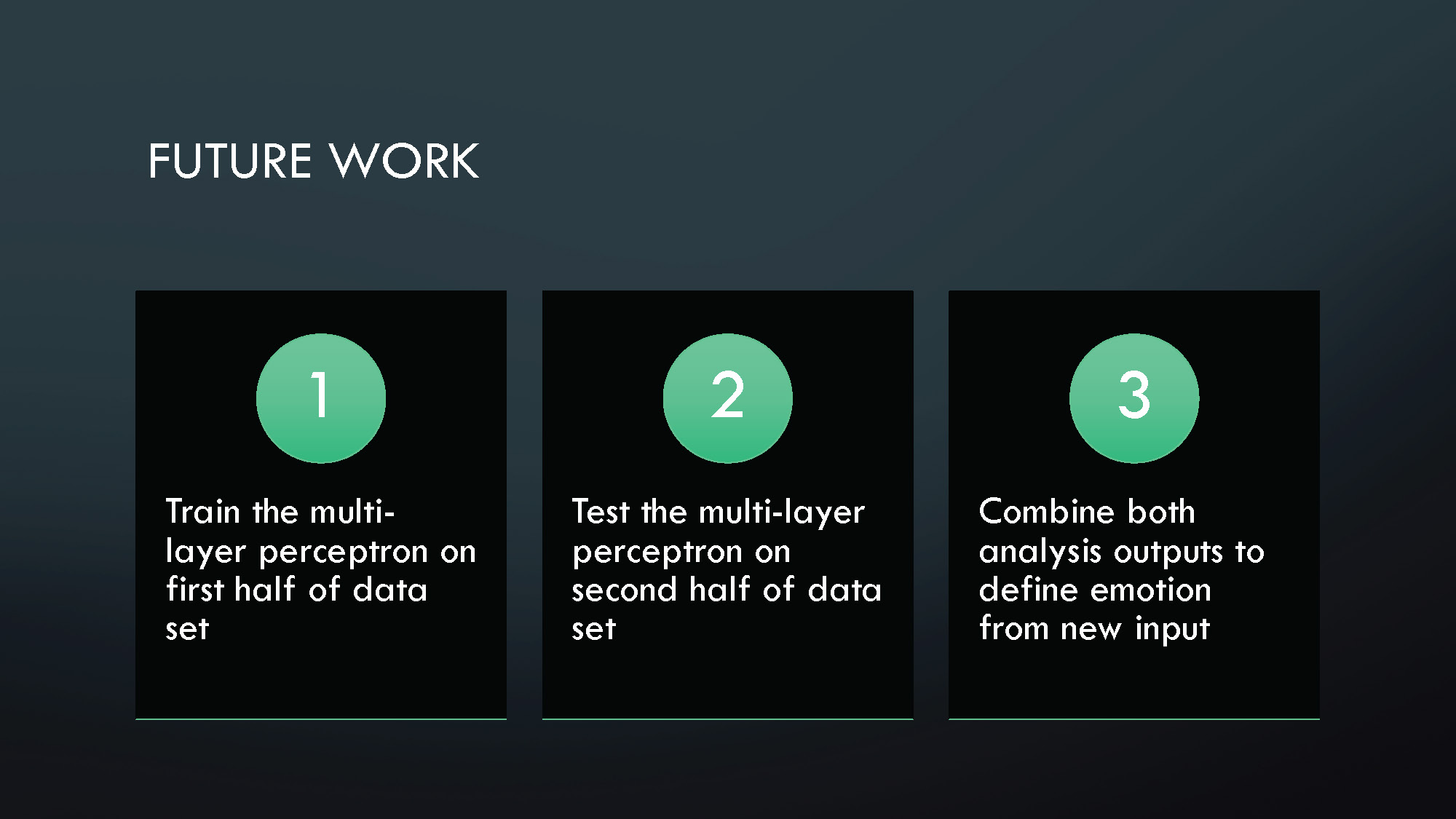

FUTURE WORK

- Train the multi-layer perceptron on the first half of the data set

- Test the multi-layer perceptron on the second half of the data set

- Combine both analysis outputs to define emotion from new input
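The planned workflow could be sketched roughly as follows. The helper names `split_in_half`, `combine_features`, and `accuracy` are hypothetical, and joining the sentiment and tonal outputs into one feature vector is only one possible way to combine them. The sketch also assumes the samples are already in a suitable order; if the data set is grouped by emotion or speaker, shuffling before the split may be needed.

```python
def split_in_half(samples):
    """Split a list of samples into a first half (training) and second half (testing)."""
    middle = len(samples) // 2
    return samples[:middle], samples[middle:]


def combine_features(sentiment_output, tonal_output):
    """Join both analysis outputs into a single feature vector."""
    return list(sentiment_output) + list(tonal_output)


def accuracy(predict, test_set):
    """Fraction of (features, label) pairs that predict() labels correctly."""
    if not test_set:
        return 0.0
    correct = sum(1 for features, label in test_set if predict(features) == label)
    return correct / len(test_set)
```

Here `predict` stands for any trained classifier that maps a feature vector to an emotion label, such as a trained multi-layer perceptron.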


For a downloadable version of this presentation, email: I-SENSE@FAU.