Gunfire or Plastic Bag Popping? Trained Computer Knows the Difference

Researchers recorded gunshot-like sounds in locations where guns were likely to be fired, including an outdoor park.

According to the Gun Violence Archive, there have been 296 mass shootings in the United States this year. Sadly, 2021 is on pace to be America’s deadliest year of gun violence in the last two decades.

Distinguishing a dangerous audio event, such as a gun firing, from a non-life-threatening one, such as a plastic bag bursting, can mean the difference between life and death. It can also determine whether public safety workers are deployed. Humans and computers alike often confuse the sound of a plastic bag popping with a real gunshot.

Over the past few years, there has been some hesitation about deploying several well-known acoustic gunshot detection systems, because they can be costly and are often unreliable.

In an experimental study, researchers from Florida Atlantic University’s College of Engineering and Computer Science focused on the reliability of these detection systems, specifically their false positive rate. A model’s ability to discern sounds correctly, even in the subtlest scenarios, is what separates a well-trained model from a poorly performing one.
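For reference, the false positive rate can be written in the standard way, treating "gunshot" as the positive class: a non-gunshot sound (for example, a plastic bag pop) flagged as a gunshot is a false positive (FP), and one correctly rejected is a true negative (TN). This is the textbook definition, not a formula quoted from the study.

```latex
\mathrm{FPR} = \frac{\mathrm{FP}}{\mathrm{FP} + \mathrm{TN}}
```

When the test set consists only of plastic bag pops, every clip is either an FP or a TN, so the 75 percent misclassification reported later in this article corresponds to an FPR of 0.75 on that set.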

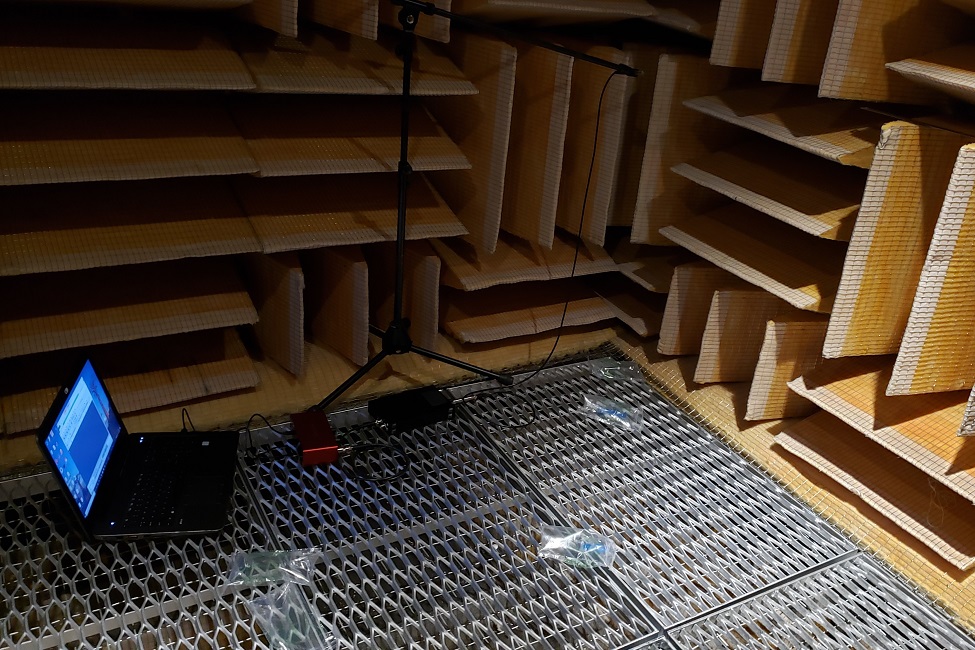

Given the daunting task of accounting for every sound that resembles a gunshot, the researchers created a new dataset composed of audio recordings of plastic bag explosions collected across a variety of environments and conditions, such as plastic bag size and distance from the recording microphones. The audio clips ranged from 400 to 600 milliseconds in duration.
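The article does not say how clips of different lengths were fed to the model. CNNs typically expect fixed-size inputs, so one common approach, shown here as a minimal sketch rather than the study's method, is to zero-pad or trim every clip to the longest duration. The 22,050 Hz sample rate, the 600 ms target and padding at the end are all assumptions.

```python
# Minimal sketch: bring variable-length clips (400-600 ms) to one fixed length.
# ASSUMPTIONS (not from the study): 22,050 Hz sample rate, 600 ms target,
# zero-padding at the end, and trimming from the end for longer clips.

SAMPLE_RATE_HZ = 22_050  # hypothetical sample rate
TARGET_MS = 600          # longest clip duration reported in the article


def samples_for(duration_ms: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Number of samples covering duration_ms at the given sample rate."""
    return sample_rate_hz * duration_ms // 1000


def fix_length(clip: list[float], target_ms: int = TARGET_MS,
               sample_rate_hz: int = SAMPLE_RATE_HZ) -> list[float]:
    """Zero-pad or trim a mono clip so every clip has the same number of samples."""
    target = samples_for(target_ms, sample_rate_hz)
    if len(clip) >= target:
        return clip[:target]                      # trim overly long clips
    return clip + [0.0] * (target - len(clip))    # pad short clips with silence


if __name__ == "__main__":
    short_clip = [0.1] * samples_for(400)  # a 400 ms clip
    long_clip = [0.2] * samples_for(650)   # a hypothetical clip longer than the target
    print(len(fix_length(short_clip)), len(fix_length(long_clip)))  # prints: 13230 13230
```

Both clips come out at 13,230 samples (600 ms at the assumed rate). Padding at the end keeps the onset of the pop at the start of the window; centering the clip would be an equally plausible choice.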

As a baseline, the researchers also developed a classification algorithm based on a convolutional neural network (CNN) to illustrate the relevance of this data collection effort. They then used the plastic bag data, together with a gunshot sound dataset, to train a CNN-based classification model that differentiates life-threatening gunshot events from non-life-threatening plastic bag explosion events.
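The article does not describe the CNN's architecture. As a generic illustration only, the sketch below shows the basic operation each convolutional filter performs: sliding a small kernel over a time-frequency representation of a clip (such as a spectrogram) and passing the result through a ReLU. The toy spectrogram and the "onset" kernel are hypothetical values chosen for readability; a real CNN learns its kernel weights during training and stacks many such layers before a classification layer.

```python
# Minimal sketch of the core operation inside a CNN layer: slide a small
# kernel over a time-frequency "image" of the audio and apply a ReLU.
# ASSUMPTIONS: the toy spectrogram and kernel values are hypothetical;
# the study's actual architecture is not reproduced here.

Matrix = list[list[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):          # weighted sum over the kernel window
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


if __name__ == "__main__":
    # Rows = frequency bins, columns = time frames (toy values).
    spectrogram = [
        [0.0, 0.0, 0.9, 0.1],
        [0.0, 0.1, 1.0, 0.2],
        [0.0, 0.0, 0.8, 0.1],
    ]
    # A hypothetical kernel that responds to a sudden rise in energy over time.
    onset_kernel = [
        [-1.0, 1.0],
        [-1.0, 1.0],
    ]
    print(relu(conv2d_valid(spectrogram, onset_kernel)))
```

The kernel responds strongly where energy jumps into the third time frame of the toy input and is zeroed out by the ReLU where energy falls off, the kind of local pattern a trained filter might pick up in an impulsive sound.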

Results of the study, published in the journal Sensors, demonstrate how easily fake gunshot sounds can confuse a gunshot sound detection system. The deep learning-based classification model, trained with a popular urban sound dataset containing gunshot sounds, could not distinguish plastic bag pops from gunshots: 75 percent of the plastic bag pop sounds were misclassified as gunshot sounds. However, once plastic bag pop sounds were included in model training, the CNN classification model performed well in distinguishing actual gunshot sounds from plastic bag sounds.

“As humans, we use additional sensory inputs and past experiences to identify sounds. Computers, on the other hand, are trained to decipher information that is often irrelevant or imperceptible to human ears,” said Hanqi Zhuang, Ph.D., senior author, professor and chair, Department of Electrical Engineering and Computer Science, College of Engineering and Computer Science. “Similar to how bats swoop around objects as they transmit high-pitched sound waves that will bounce back to them at different time intervals, we used different environments to give the machine learning algorithm a better perception sense of the differentiation of the closely related sounds.”

For the study, gunshot-like sounds were recorded at a total of eight indoor and outdoor locations where guns were likely to be fired. Data collection began with experiments on various types of bags, and trash can liners were selected as the most suitable. Most of the audio clips were captured using six recording devices. To test the extent to which a sound classification model could be confused by fake gunshots, the researchers first trained the model without exposing it to plastic bag pop sounds.

The initial training data included 374 gunshot samples obtained from the urban sound database. The researchers used 10 classes from that database (gun shot, dog barking, children playing, car horn, air conditioner, street music, siren, engine idling, jackhammer, and drilling). After training, the model was tested on plastic bag pop sounds to see whether it could reject them rather than classify them as true gunshots.
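The evaluation step can be sketched as follows. A classifier trained only on the 10 urban-sound classes has no "plastic bag" label, so every bag pop it hears must be assigned to one of those 10 classes; the metric of interest is the fraction assigned to the gunshot class. The label strings are modeled on the class list above, and the predictions are hypothetical placeholders (chosen so the arithmetic mirrors the 75 percent figure reported earlier), not the study's outputs.

```python
# Minimal sketch of the evaluation described above: a classifier trained only
# on the 10 urban-sound classes is shown plastic bag pops, which belong to
# none of its classes, and we measure how often it answers "gun_shot".
# ASSUMPTIONS: the label strings and the prediction list are hypothetical
# placeholders; they are not the study's data or outputs.

URBAN_CLASSES = [
    "gun_shot", "dog_bark", "children_playing", "car_horn", "air_conditioner",
    "street_music", "siren", "engine_idling", "jackhammer", "drilling",
]


def gunshot_false_alarm_rate(predictions: list[str]) -> float:
    """Fraction of non-gunshot clips (here: bag pops) predicted as 'gun_shot'."""
    if not predictions:
        raise ValueError("need at least one prediction")
    unknown = set(predictions) - set(URBAN_CLASSES)
    if unknown:
        raise ValueError(f"labels outside the 10 training classes: {unknown}")
    return sum(p == "gun_shot" for p in predictions) / len(predictions)


if __name__ == "__main__":
    # Hypothetical predictions for eight plastic bag pop clips.
    preds = ["gun_shot", "gun_shot", "car_horn", "gun_shot",
             "gun_shot", "jackhammer", "gun_shot", "gun_shot"]
    print(f"{gunshot_false_alarm_rate(preds):.0%} of bag pops flagged as gunshots")
    # prints: 75% of bag pops flagged as gunshots
```

Because the bag pops have no class of their own, a high rate here means the model is mapping impulsive non-gunshot sounds onto its closest match, which is the failure the plastic bag dataset was built to expose.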

“The high percentage of misclassification indicates that it is very difficult for a classification model to discern gunshot-like sounds such as those from plastic bag pop sounds, and real gunshot sounds,” said Rajesh Baliram Singh, first author and a Ph.D. student in FAU’s Department of Electrical Engineering and Computer Science. “This warrants the process of developing a dataset containing sounds that are similar to real gunshot sounds.”

In gunshot detection, a database of a particular sound that can be confused with gunshots, yet is rich in diversity, can lead to a more effective detection system. This idea motivated the researchers to create a database of plastic bag explosion sounds. The more diverse the recordings of a given sound, the more likely a machine learning algorithm is to detect that sound correctly.

“Improving the performance of a gunshot detection algorithm, in particular, to reduce its false positive rate, will reduce the chances of treating innocuous audio trigger events as perilous audio events involving firearms,” said Stella Batalama, Ph.D., dean, College of Engineering and Computer Science. “This dataset developed by our researchers, along with the classification model they trained for gunshot and gunshot-like sounds is an important step leading to much fewer false positives and in improving overall public safety by deploying critical personnel only when necessary.”

The study’s co-author is Jeet Kiran Pawani, M.S., who conducted the research while at Georgia Tech.

-FAU-
