Data Fusion of 2D Perception Algorithm and 3D Spatial Data for Social Distancing Awareness Application

Slide-1

REU Scholar(s): Emily Portalatín-Méndez, Jennifer Uraga Lopez

REU Project: Artificial Intelligence for Smart Cities

Advisor: Dr. Jinwoo Jang

Home Institution: Lehman College, City University of New York (CUNY)

Slide-2

NSF Engineering Research Center for Smart Streetscapes (CS3)

The Center for Smart Streetscapes (CS3) is a Gen-4 NSF Engineering Research Center based at Columbia University (New York). It takes a new approach to smart cities by working with 80+ stakeholders (industry partners, community organizations, municipalities, and K-12 schools), both as collaborative co-producers of knowledge and as auditors of technology research and development.

Slide-3

With its extensive network of partners, CS3 unites diverse research communities through a convergent research model that delivers innovations across five engineering and scientific areas:

Research Thrusts

- Wi-Edge: Current 5G & 6G bandwidth, latency, and density concentrations

- Situational Awareness: High-accuracy recognition & tracking across fields of view from sensors in complex, dynamic scenes.

- Security, Privacy, & Fairness: Emerging technologies pose new challenges that may compromise social equity.

- Public Interest Technology: Technology can intentionally or unintentionally produce or reproduce social harms, amplified for data collection in underserved communities.

- Streetscape Applications: Cross-layer coordination and optimization, human-streetscape interaction, generalizability, and future streetscape design.

Slide-4

Student Leadership Council & AI for Smart Cities Team

Student Leadership Council: The Student Leadership Council (SLC) serves as a liaison between the CS3 student body and the center leadership, representing both the undergraduate and graduate levels across all core partner institutions. Its goal is to support open communication, provide leadership development, and create opportunities for collaboration.

Research Thrusts & AI for Smart Cities: Wi-Edge, Streetscape Applications, Situational Awareness

- Fixed Sensing with Cameras (Emily)

- Tracking with LiDAR Sensors (Jennifer)

- Scooter & Motor Vehicle Sensing (Ethan)

- Simulation Environments (Abigail)

- 3D Data Visualization (Ariana)

Slide-5

Future of Streetscapes with CS3

Machine Vision Helping Humanity in Urban Environments

- New opportunity: AI camera intelligence can detect objects in real time

- This helps us understand human behavior and public safety

Slide-6

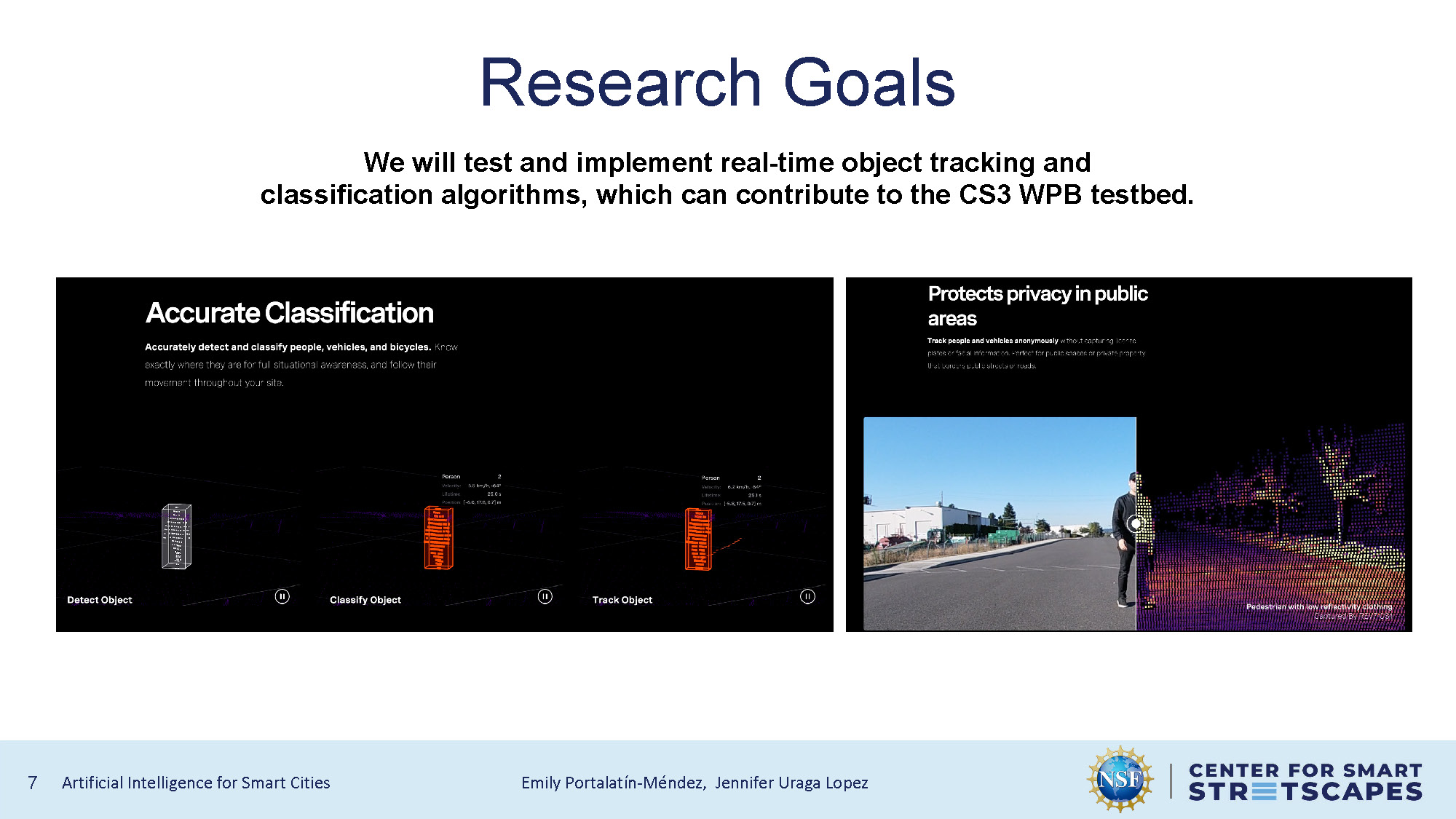

Research Goals

We will test and implement real-time object tracking and classification algorithms that can contribute to the CS3 West Palm Beach (WPB) testbed.

Slide-7

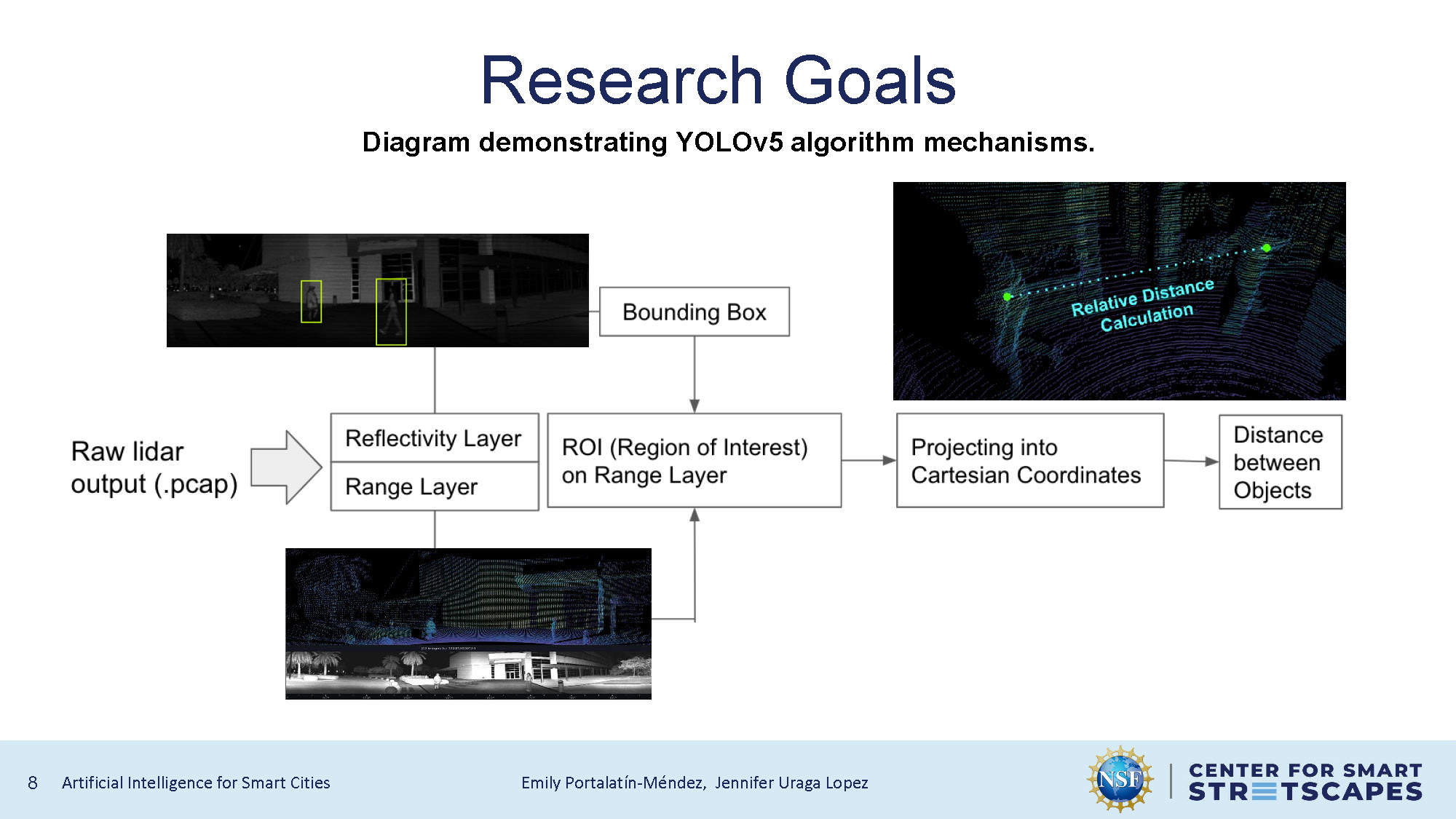

Research Goals

Diagram showing how YOLOv5 detections are combined with lidar data.

The diagram shows a sequence of labeled boxes: Raw lidar output (.pcap) → Reflectivity Layer / Range Layer → ROI (Region of Interest) → Projecting into Cartesian Coordinates → Distance between Objects. A Bounding Box label connects to the ROI box.

Images include: a grayscale scene with two yellow bounding boxes, a combined lidar and grayscale street/building view, and a 3D point cloud with the label "Relative Distance Calculation."
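The "Projecting into Cartesian Coordinates" step in the diagram can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's code: it assumes the range layer is a 2D grid of ranges in metres, that every image column has a known azimuth angle and every row a known elevation angle, and that the object's position can be approximated by the median range inside its bounding box taken along the beam through the box centre. The function names, the toy image, and the angle tables are all hypothetical, and the simple spherical model ignores the beam offsets of a real sensor.

```python
import math
from statistics import median

def roi_median_range(range_img, box):
    """Median non-zero range (metres) inside a bounding box.

    box is (row_min, col_min, row_max, col_max), inclusive, in pixels.
    A value of 0 is treated as "no return" and skipped (sketch assumption).
    """
    r0, c0, r1, c1 = box
    vals = [range_img[r][c]
            for r in range(r0, r1 + 1)
            for c in range(c0, c1 + 1)
            if range_img[r][c] > 0]
    if not vals:
        raise ValueError("no valid lidar returns inside the ROI")
    return median(vals)

def to_cartesian(rng, azimuth_deg, elevation_deg):
    """Convert a range and two beam angles to (x, y, z) in metres."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = rng * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            rng * math.sin(el))

def box_position(range_img, box, azimuths_deg, elevations_deg):
    """Approximate 3D position of the object inside a bounding box."""
    r0, c0, r1, c1 = box
    rng = roi_median_range(range_img, box)
    row_c = (r0 + r1) // 2  # centre pixel of the box
    col_c = (c0 + c1) // 2
    return to_cartesian(rng, azimuths_deg[col_c], elevations_deg[row_c])

# Toy 3x4 range image (metres); 0.0 marks a pixel with no return.
range_img = [
    [0.0, 4.0, 4.0, 9.0],
    [4.0, 4.0, 4.0, 9.0],
    [4.0, 4.0, 0.0, 9.0],
]
azimuths_deg = [-30.0, -10.0, 10.0, 30.0]  # one made-up angle per column
elevations_deg = [10.0, 0.0, -10.0]        # one made-up angle per row

print(box_position(range_img, (0, 0, 2, 2), azimuths_deg, elevations_deg))
```

Using the median rather than the mean keeps a few background pixels inside the box (such as the 9.0 m column here, if the box were wider) from pulling the estimate away from the object.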

Slide-8

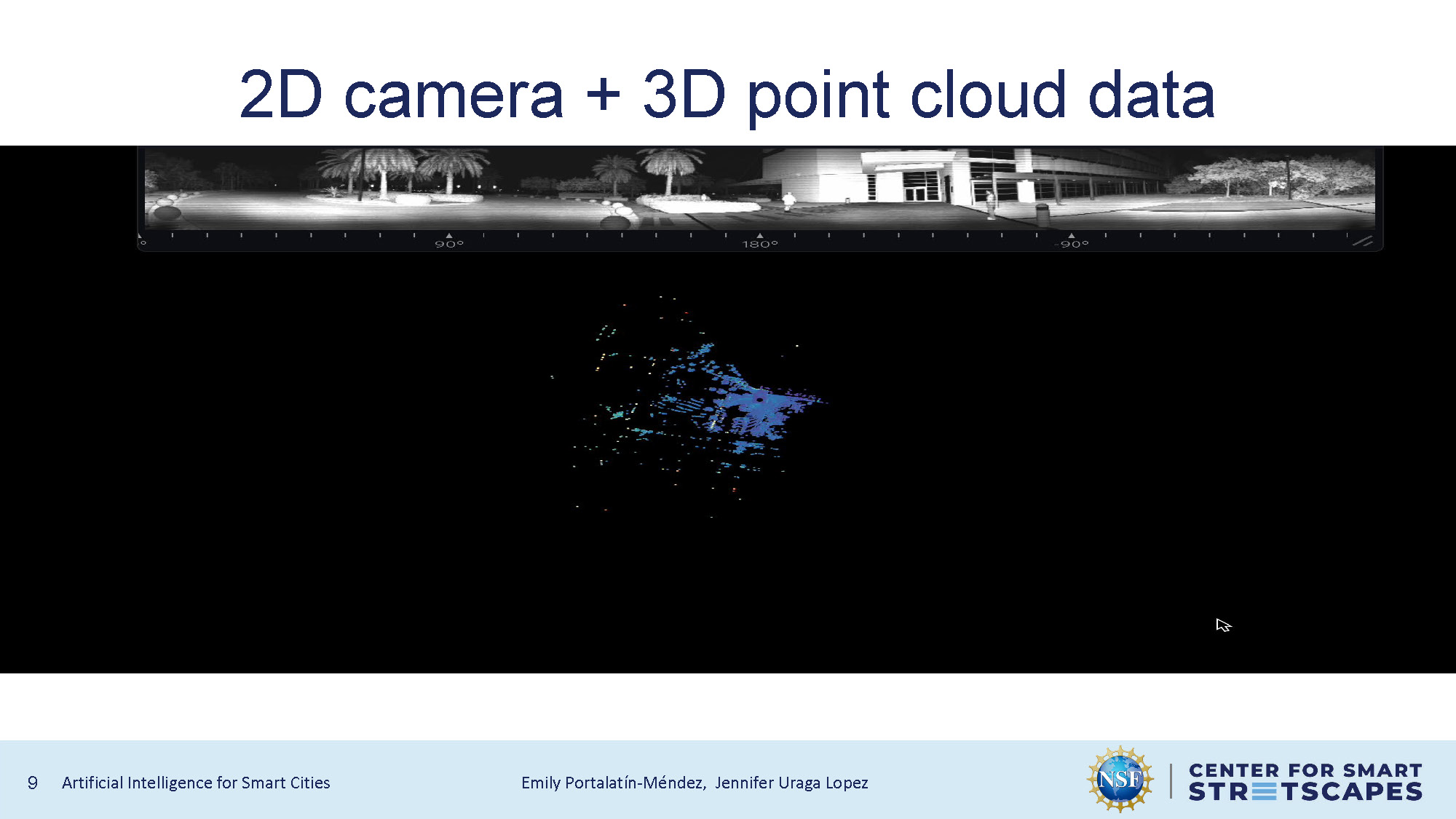

2D camera + 3D point cloud data

Visual representation of how 2D camera data is combined with 3D point cloud information for enhanced spatial awareness and object detection.

Slide-9

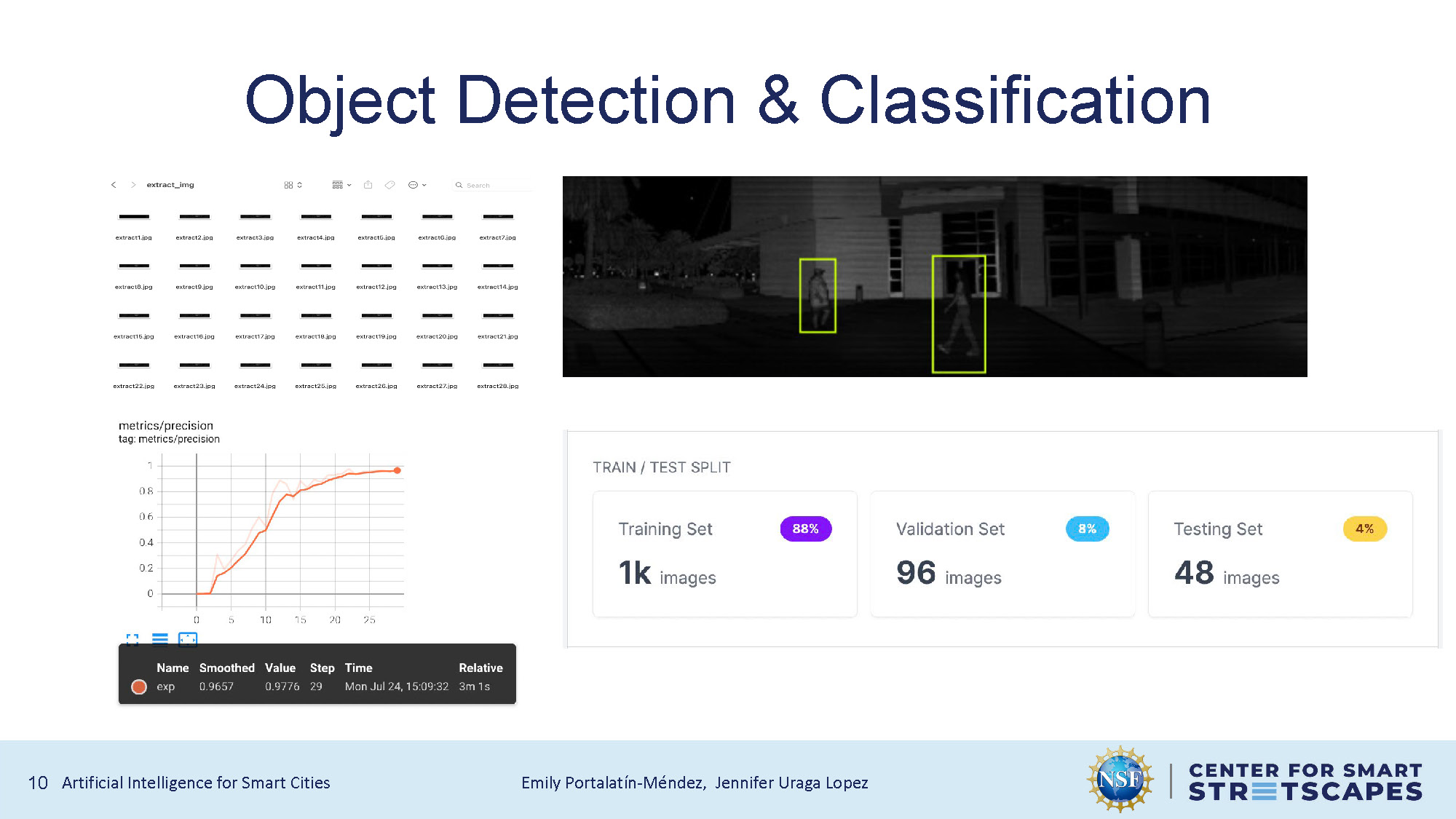

Object Detection & Classification

The slide contains four main sections:

- A grid of small thumbnail images with filenames.

- A grayscale street image with two figures highlighted by yellow bounding boxes.

- A line graph labeled "metrics/precision" showing values rising over steps, with details in a small black box below the chart.

- A "TRAIN / TEST SPLIT" box showing: Training Set (1k images, 88%), Validation Set (96 images, 8%), and Testing Set (48 images, 4%).
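The split proportions on this slide can be reproduced with a short sketch like the one below. It is only an illustration: the slide does not say which tool produced the split, and the file names, the fixed seed, and the 1,200-image dataset size are assumptions chosen for the example. Only the 88% / 8% / 4% fractions come from the slide.

```python
import random

def split_dataset(items, fractions=(0.88, 0.08, 0.04), seed=0):
    """Shuffle items and cut them into train / validation / test lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a repeatable split
    n = len(shuffled)
    n_train = round(n * fractions[0])
    n_val = round(n * fractions[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, so no image is dropped
    return train, val, test

# Hypothetical file names for a 1,200-image dataset.
names = [f"frame_{i:04d}.png" for i in range(1200)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # → 1056 96 48
```

For this assumed dataset size the fractions yield 96 validation and 48 test images, the same counts shown on the slide.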

Slide-10

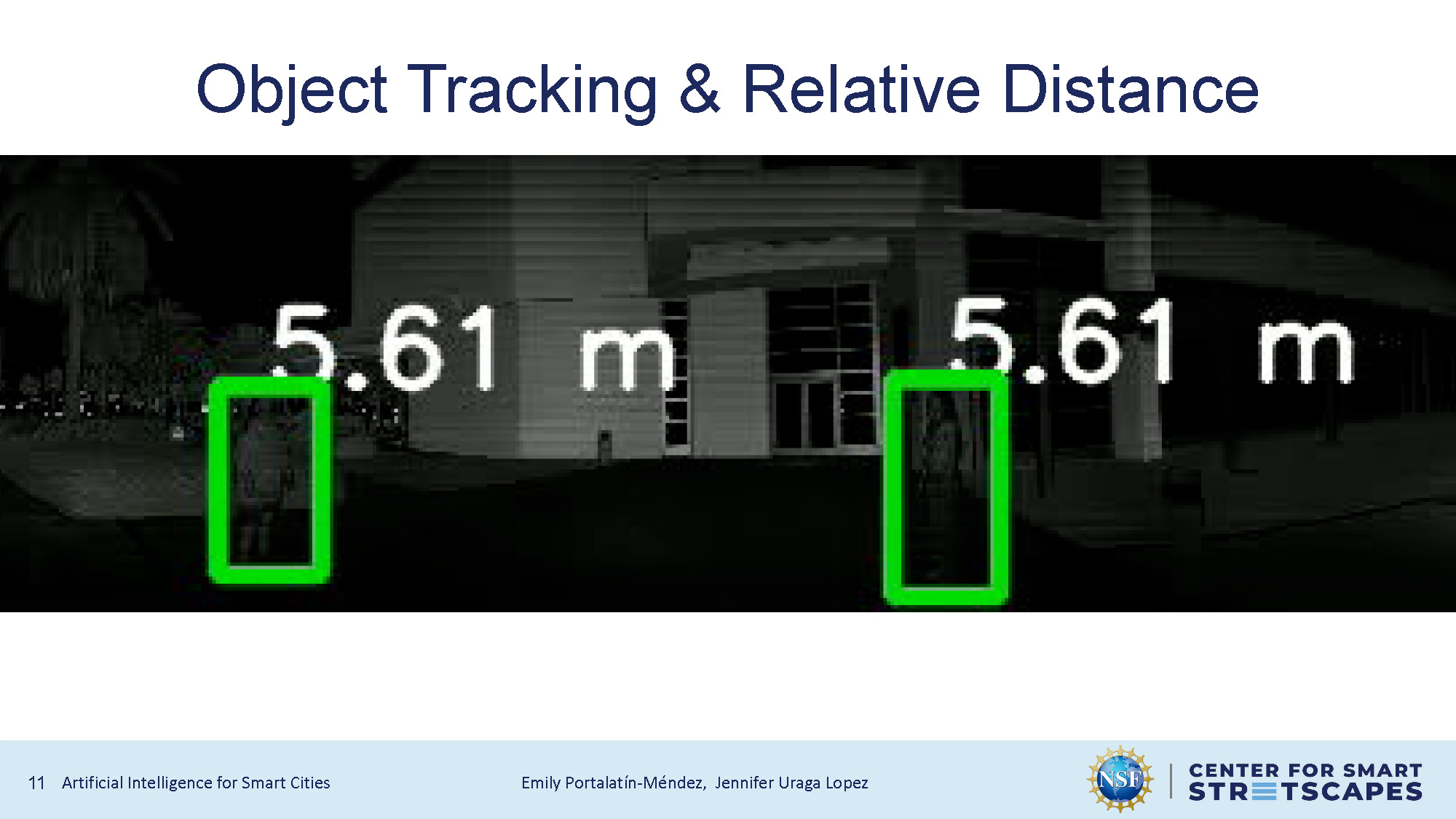

Object Tracking & Relative Distance

Demonstration of object tracking capabilities and relative distance measurement using combined 2D and 3D data.
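Once each tracked object has a 3D position, the relative distance check reduces to a pairwise Euclidean distance. The sketch below is a hedged illustration only: the track IDs, the coordinates, and the 2.0 m threshold are hypothetical values picked for the example, since the slides do not state the distance threshold the application uses.

```python
import math
from itertools import combinations

def pairwise_distances(positions):
    """Return {(id_a, id_b): distance_m} for every pair of tracked objects.

    positions maps a track ID to an (x, y, z) tuple in metres.
    """
    return {(a, b): math.dist(positions[a], positions[b])
            for a, b in combinations(sorted(positions), 2)}

def too_close(positions, threshold_m=2.0):
    """List the ID pairs separated by less than threshold_m (assumed value)."""
    return [pair for pair, d in pairwise_distances(positions).items()
            if d < threshold_m]

# Three hypothetical tracked pedestrians, positions in metres.
tracks = {1: (0.0, 0.0, 0.0), 2: (1.0, 1.0, 0.0), 3: (6.0, 0.0, 0.0)}
print(too_close(tracks))  # → [(1, 2)]
```

Checking every pair grows quadratically with the number of tracked objects, which is usually acceptable for the handful of people visible in a single street scene.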

Slide-11

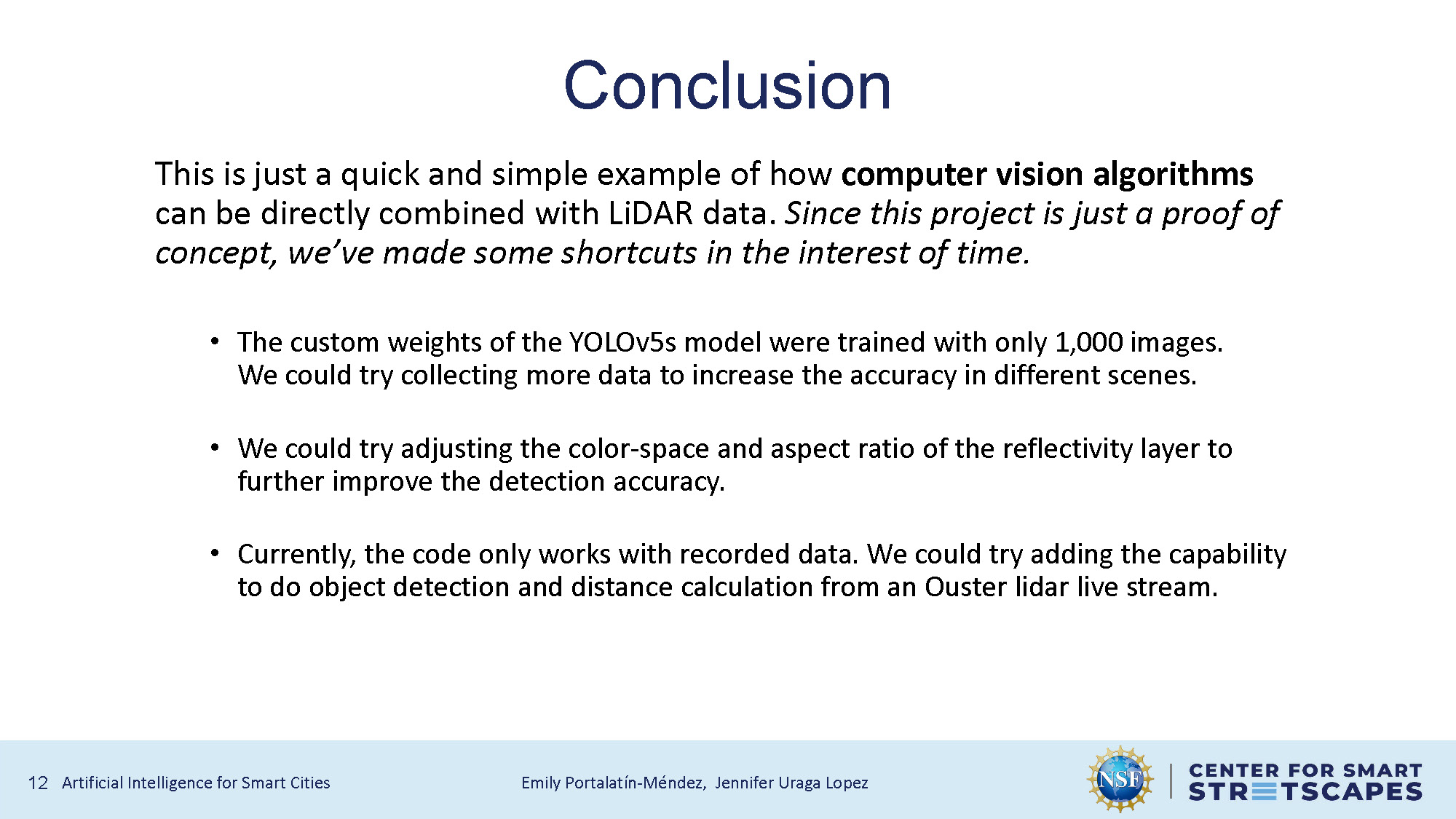

Conclusion

This project is a quick proof of concept showing how computer vision algorithms can be combined directly with LiDAR data. In the interest of time we took some shortcuts, which point to possible next steps:

- The custom weights of the YOLOv5s model were trained with only 1,000 images. We could try collecting more data to increase the accuracy in different scenes.

- We could try adjusting the color-space and aspect ratio of the reflectivity layer to further improve the detection accuracy.

- Currently, the code only works with recorded data. We could try adding the capability to do object detection and distance calculation from an Ouster lidar live stream.

Slide-12

Questions & Feedback

For a downloadable version of this presentation, email: I-SENSE@FAU.