Deep Learning Models for Human Activity Recognition Using Wearable Body Sensors

Slide-1

Shelly Davidashvilly

Project Supervisor: Dr. Behnaz Ghoraani

FAU ISENSE - REU Summer 2021

Slide-2

The objective of the project is to develop deep learning models capable of classifying labeled motion data with high accuracy and high generalizability to the Parkinson's population.

Slide-3

Human activity recognition (HAR) is the challenge of utilizing machine learning algorithms to predict an individual's activity.

This project focuses on HAR through wearable body sensors.

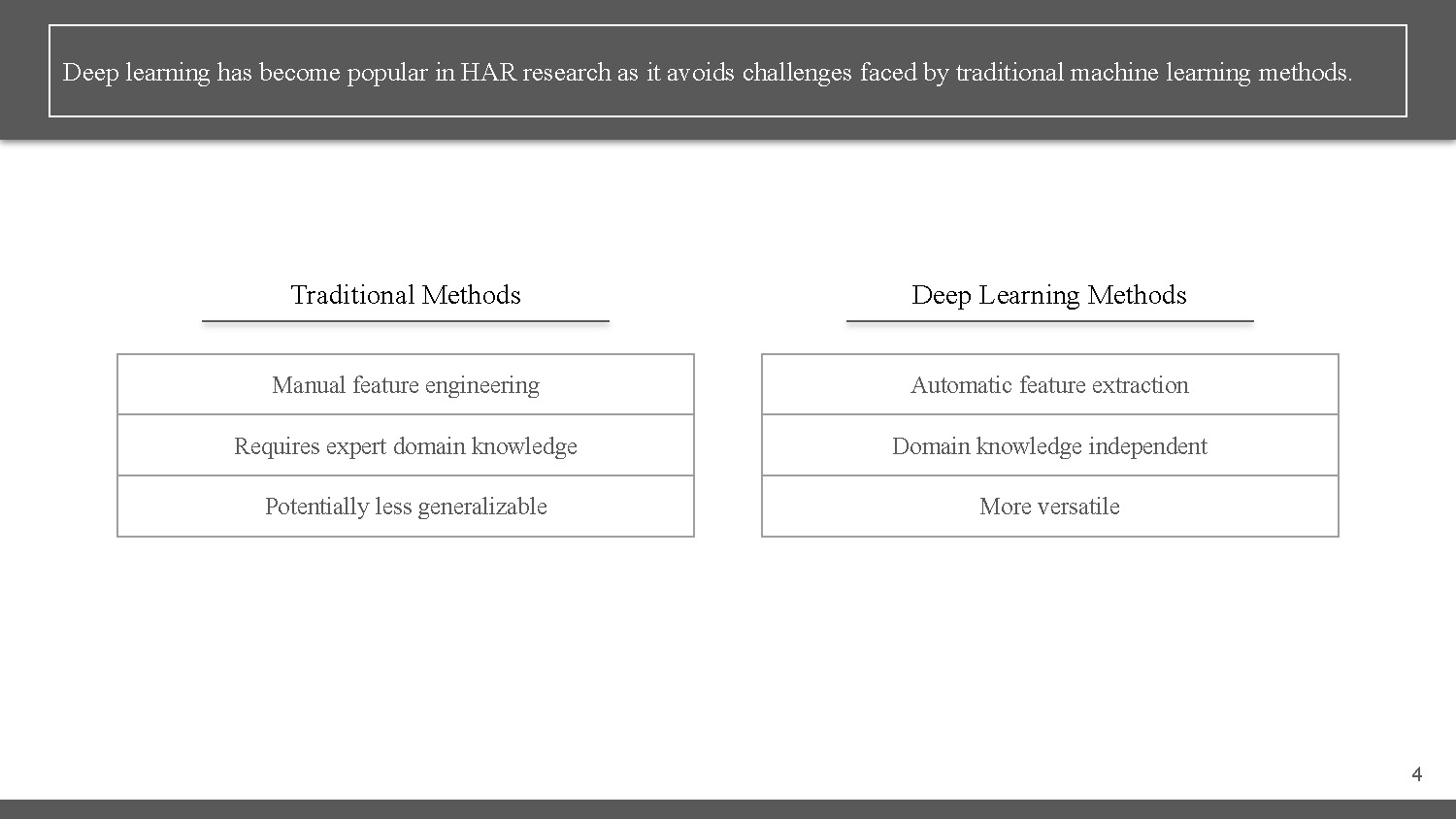

Slide-4

Deep learning has become popular in HAR research as it avoids challenges faced by traditional machine learning methods.

| Traditional Methods | Deep Learning Methods |
|---|---|
| Manual feature engineering | Automatic feature extraction |
| Requires expert domain knowledge | Domain knowledge independent |
| Potentially less generalizable | More versatile |

Slide-5

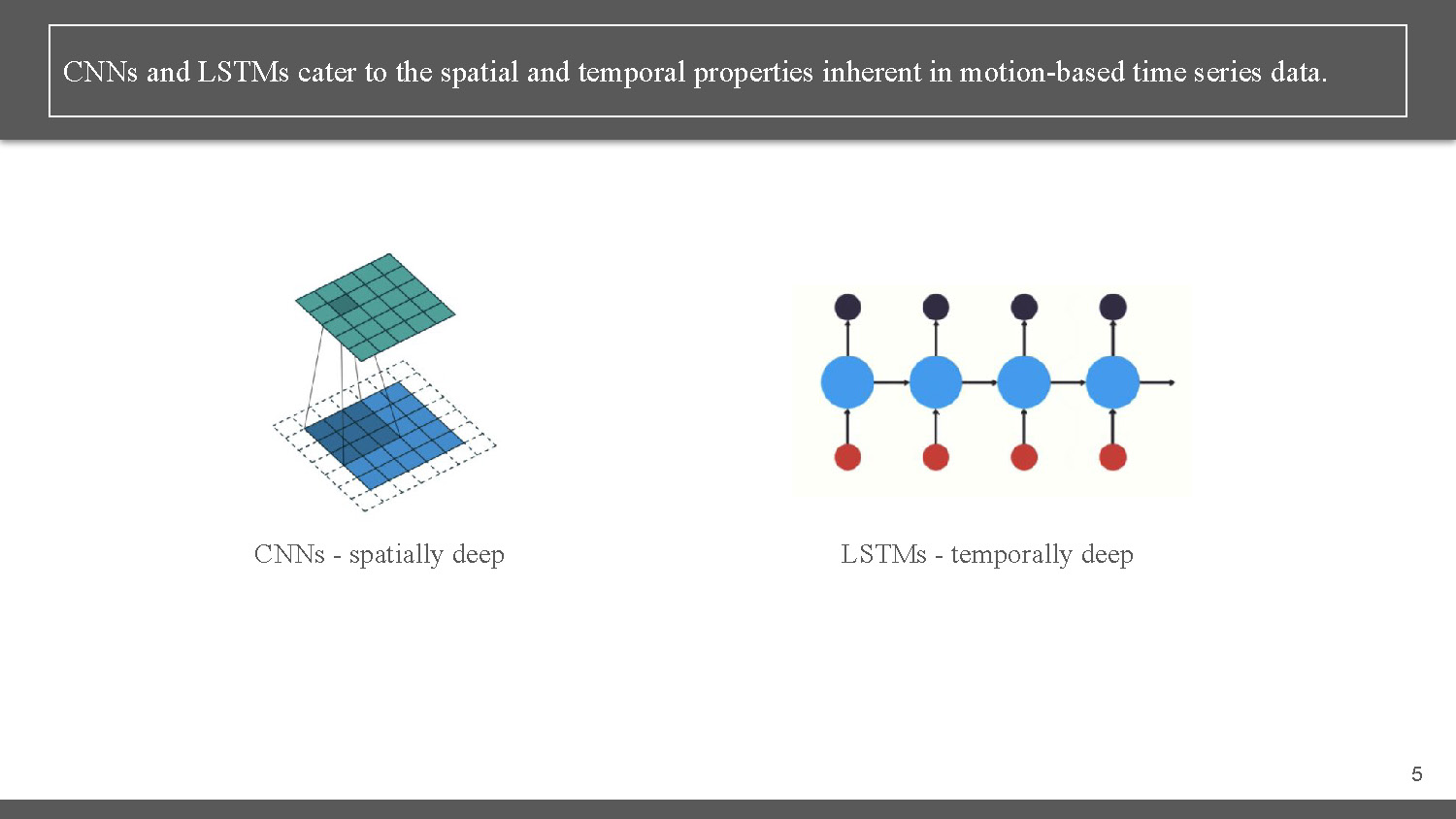

CNNs and LSTMs cater to the spatial and temporal properties inherent in motion-based time series data.

● CNNs: spatially deep

● LSTMs: temporally deep

Slide-6

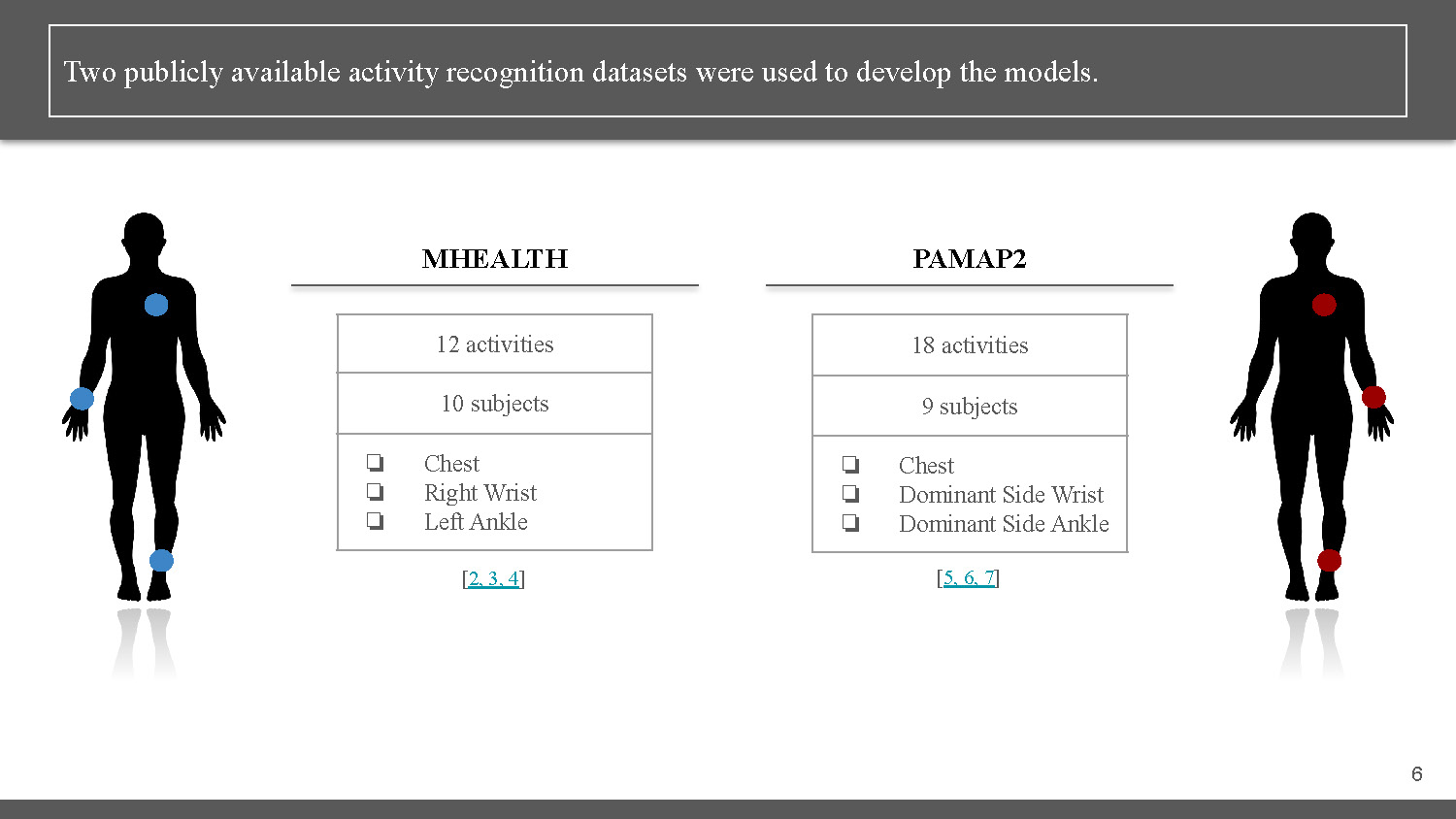

Two publicly available activity recognition datasets were used to develop the models.

| | MHEALTH | PAMAP2 |
|---|---|---|
| Activities | 12 | 18 |
| Subjects | 10 | 9 |
| Sensor placement | Chest, right wrist, left ankle | Chest, dominant-side wrist, dominant-side ankle |

Slide-7

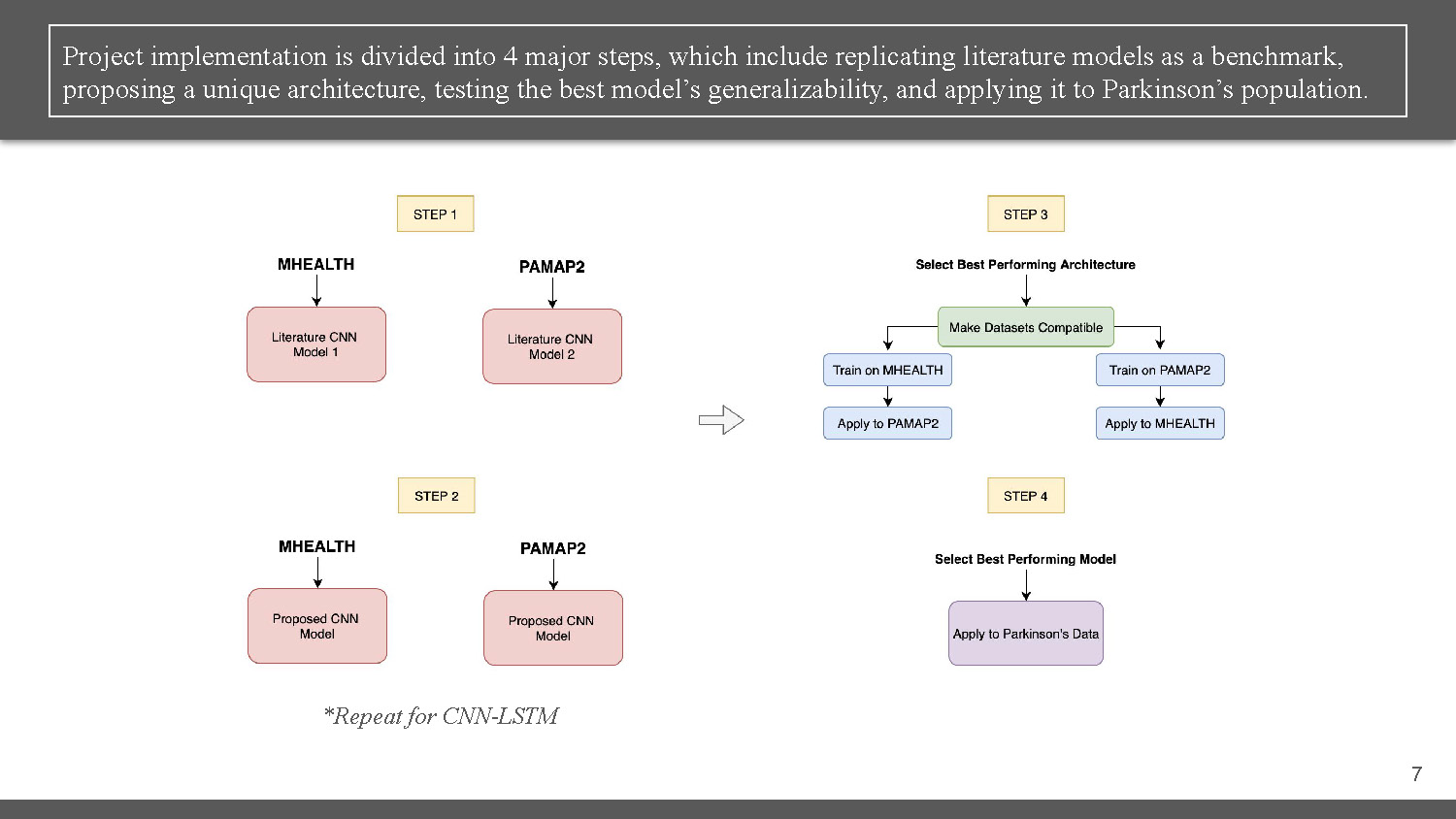

Project implementation is divided into four major steps: replicating literature models as a benchmark, proposing a unique architecture, testing the best model's generalizability, and applying it to data from the Parkinson's population.

The slide displays a flowchart with four steps, labeled "STEP 1," "STEP 2," "STEP 3," and "STEP 4." Each step has a title and a series of interconnected boxes representing different processes. Step 1 shows two pink boxes, "Literature CNN Model 1" and "Literature CNN Model 2," under the headings "MHEALTH" and "PAMAP2," respectively. Step 2 shows "Proposed CNN Model" under the same two headings. Step 3 shows a flow with "Make Datasets Compatible" leading to two branches: "Train on MHEALTH" and "Train on PAMAP2," which then lead to "Apply to PAMAP2" and "Apply to MHEALTH." Step 4 shows "Apply to Parkinson’s Data." Below the flowchart, in italics, is the text "*Repeat for CNN-LSTM."

Slide-8

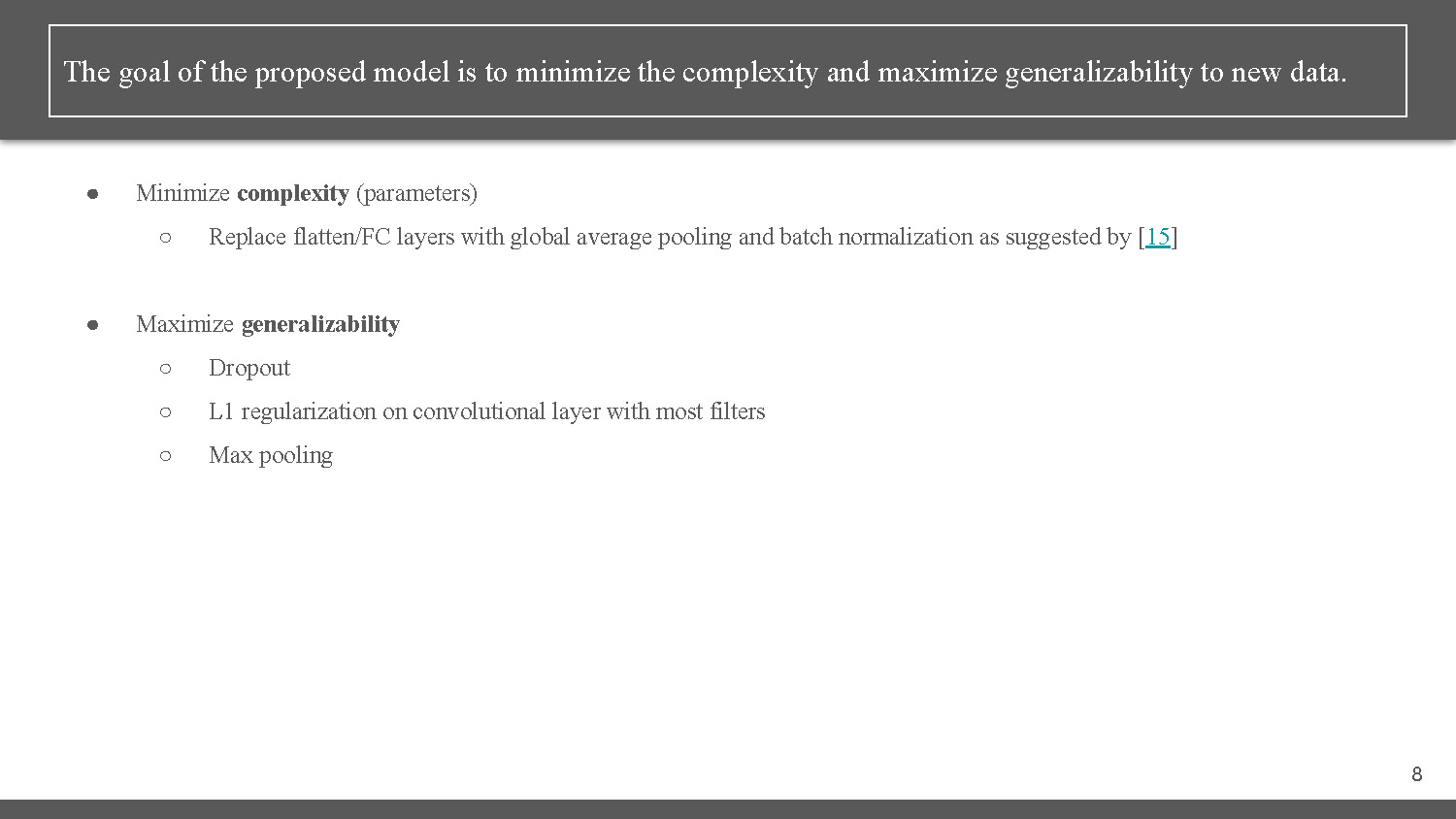

The goal of the proposed model is to minimize the complexity and maximize generalizability to new data.

● Minimize complexity (parameters)

○ Replace flatten/FC layers with global average pooling and batch normalization as suggested by [15]

● Maximize generalizability

○ Dropout

○ L1 regularization on convolutional layer with most filters

○ Max pooling
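The parameter savings from replacing flatten/FC layers with global average pooling can be illustrated with a rough count. This is a minimal sketch: the layer sizes below are hypothetical and are not the ones used in the proposed model, and the helper names are made up for this example.

```python
def dense_params(in_features, out_features):
    """Weights plus one bias per output unit for a fully connected layer."""
    return in_features * out_features + out_features


def batch_norm_params(channels):
    """Scale, shift, moving mean, and moving variance per channel.

    The two moving statistics are stored with the model but not trained.
    """
    return 4 * channels


# Hypothetical sizes, chosen only for illustration.
time_steps = 100    # samples left per window after the convolution/pooling stack
filters = 32        # feature maps produced by the last convolutional layer
hidden_units = 128  # width of the fully connected layer being replaced
num_classes = 12    # e.g. the 12 MHEALTH activities

# Head A: flatten -> fully connected -> softmax classifier.
flatten_head = (dense_params(time_steps * filters, hidden_units)
                + dense_params(hidden_units, num_classes))

# Head B: global average pooling -> batch normalization -> softmax classifier.
# Global average pooling has no parameters: it averages each feature map over time.
gap_head = batch_norm_params(filters) + dense_params(filters, num_classes)

print("flatten + FC head:", flatten_head)
print("GAP + BN head:    ", gap_head)
```

With these made-up sizes the classifier head shrinks from 411,276 to 524 parameters, because the flattened input grows with the window length while the pooled input depends only on the number of filters.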

Slide-9

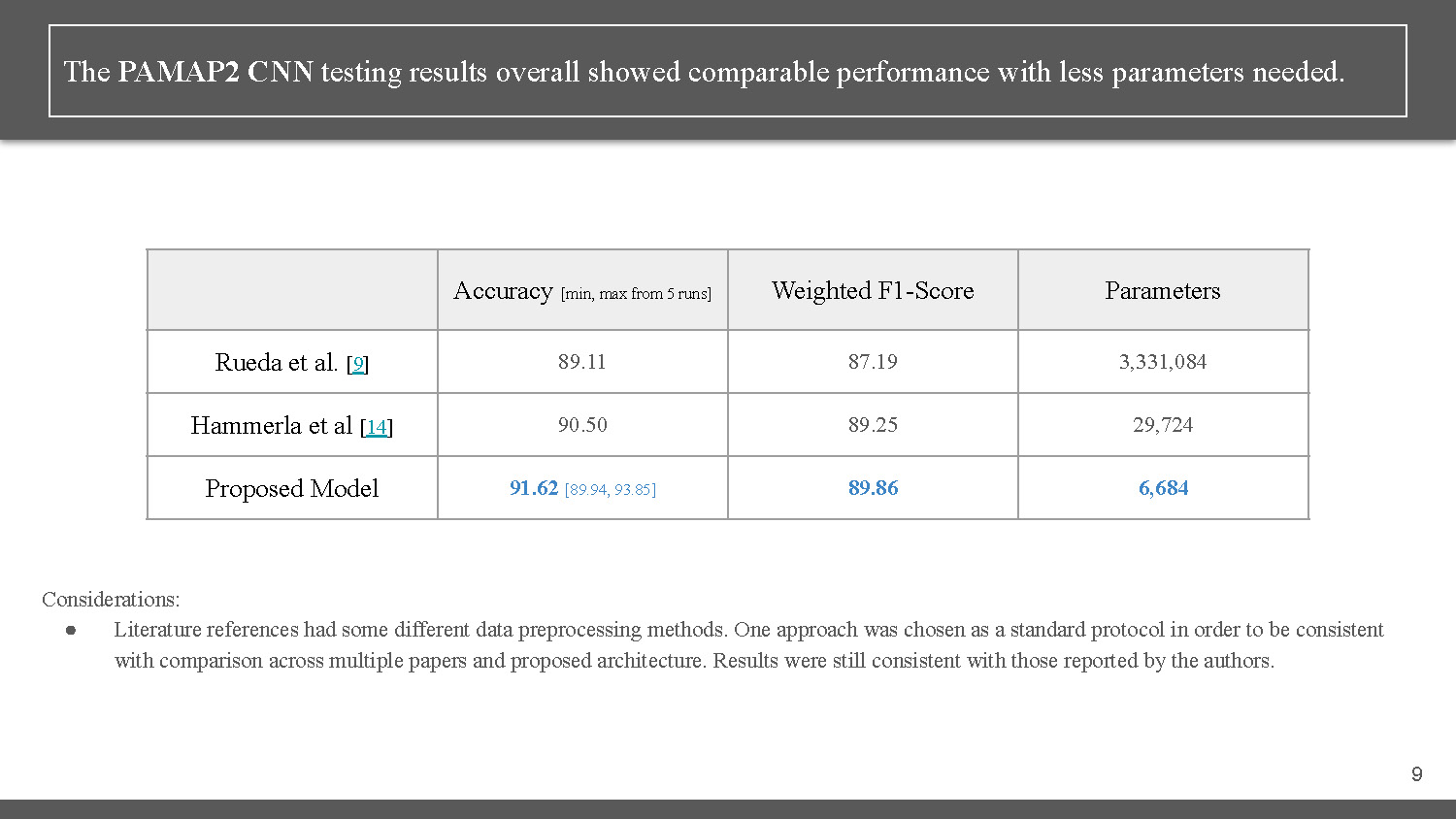

The PAMAP2 CNN testing results showed comparable performance overall with fewer parameters.

| Model | Accuracy [min, max from 5 runs] | Weighted F1-Score | Parameters |
|---|---|---|---|
| Rueda et al. [9] | 89.11 | 87.19 | 3,331,084 |
| Hammerla et al. [14] | 90.50 | 89.25 | 29,724 |
| Proposed Model | 91.62 [89.94, 93.85] | 89.86 | 6,684 |

Considerations:

● The literature references used somewhat different data preprocessing methods. One approach was chosen as a standard protocol so that comparisons across the papers and the proposed architecture are consistent. Results were still consistent with those reported by the authors.
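For readers unfamiliar with the weighted F1-score reported next to accuracy, the sketch below shows one common definition: per-class F1 averaged with weights proportional to each class's number of true samples (the same definition as scikit-learn's `average="weighted"`). The slides do not state which implementation was used, and the labels here are made up for illustration.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's share of y_true."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += (count / total) * f1
    return score


# Made-up labels for illustration only; not taken from MHEALTH or PAMAP2.
y_true = ["walk", "walk", "walk", "walk", "sit", "sit"]
y_pred = ["walk", "walk", "walk", "walk", "sit", "walk"]

print("accuracy:   ", round(accuracy(y_true, y_pred), 4))
print("weighted F1:", round(weighted_f1(y_true, y_pred), 4))
```

On imbalanced activity data the two metrics can differ, which is one reason to report both.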

Slide-10

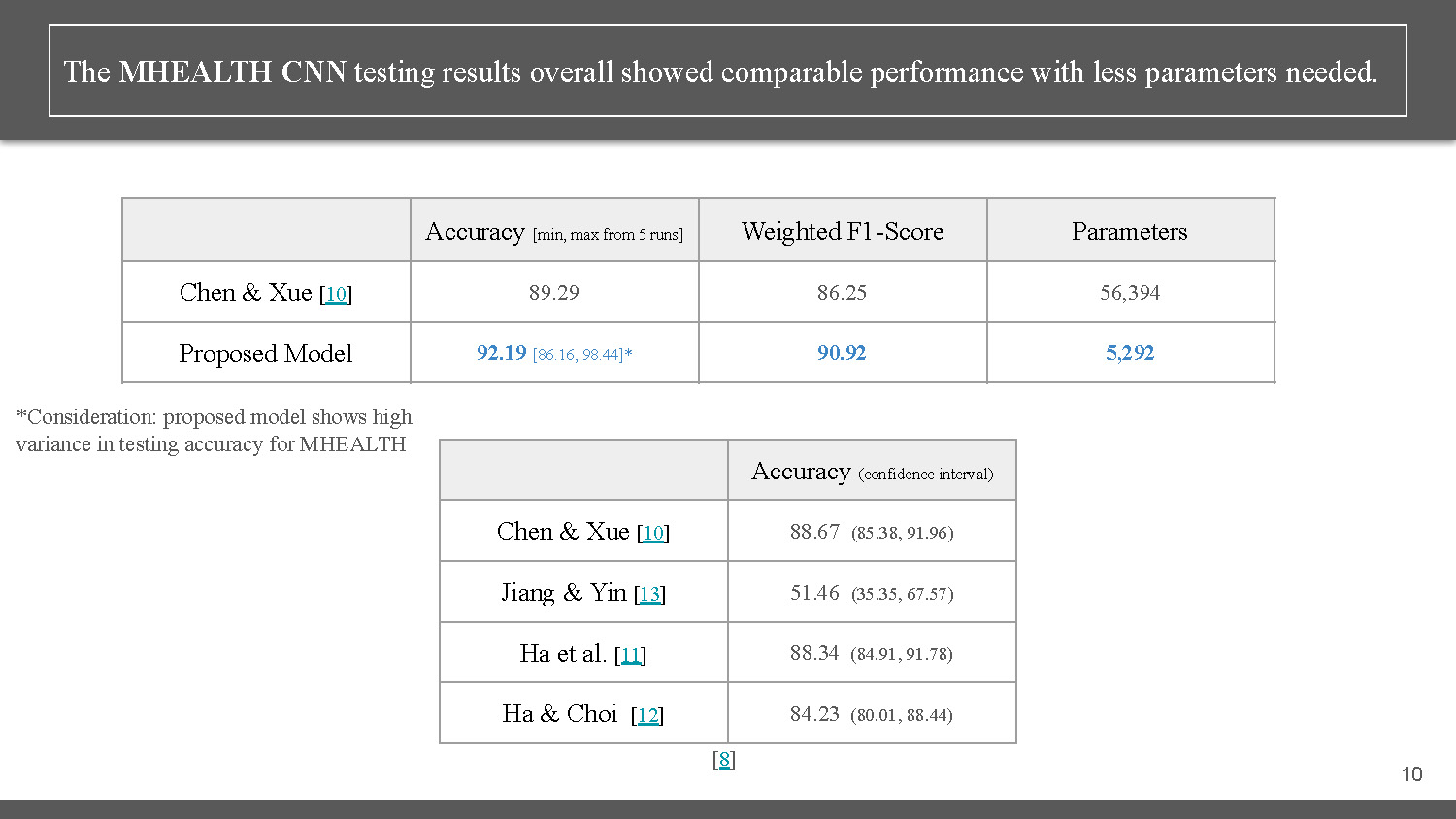

The MHEALTH CNN testing results showed comparable performance overall with fewer parameters.

| Model | Accuracy [min, max from 5 runs] | Weighted F1-Score | Parameters |
|---|---|---|---|
| Chen & Xue [10] | 89.29 | 86.25 | 56,394 |
| Proposed Model | 92.19 [86.16, 98.44]* | 90.92 | 5,292 |

*Consideration: proposed model shows high variance in testing accuracy for MHEALTH

| Model | Accuracy (confidence interval) |
|---|---|
| Chen & Xue [10] | 88.67 (85.38, 91.96) |
| Jiang & Yin [13] | 51.46 (35.35, 67.57) |
| Ha et al. [11] | 88.34 (84.91, 91.78) |
| Ha & Choi [12] | 84.23 (80.01, 88.44) |
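Run-to-run variance like that noted above can be summarized with a mean, a [min, max] range, and a confidence interval. The sketch below is one way to do this under stated assumptions: the accuracies are hypothetical, the interval is a Student's t interval on the mean, and the slides do not say how the intervals in the table were computed.

```python
import math
import statistics


def summarize_runs(accuracies, t_critical):
    """Return mean, min, max, and a t-based confidence interval for repeated runs."""
    mean = statistics.mean(accuracies)
    # Standard error of the mean, using the sample standard deviation (n - 1).
    sem = statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    half_width = t_critical * sem
    return {
        "mean": mean,
        "min": min(accuracies),
        "max": max(accuracies),
        "ci": (mean - half_width, mean + half_width),
    }


# Hypothetical test accuracies (%) from 5 runs; NOT the project's results.
runs = [88.0, 91.0, 93.0, 90.0, 95.0]

# 2.776 is the two-sided 95% critical value of Student's t with 4 degrees of freedom.
summary = summarize_runs(runs, t_critical=2.776)

print("mean:", round(summary["mean"], 2))
print("range:", [summary["min"], summary["max"]])
print("95% CI:", tuple(round(x, 2) for x in summary["ci"]))
```

With only five runs the interval is wide, so a large [min, max] spread should be read as a sign that more runs are needed before comparing models.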

Slide-11

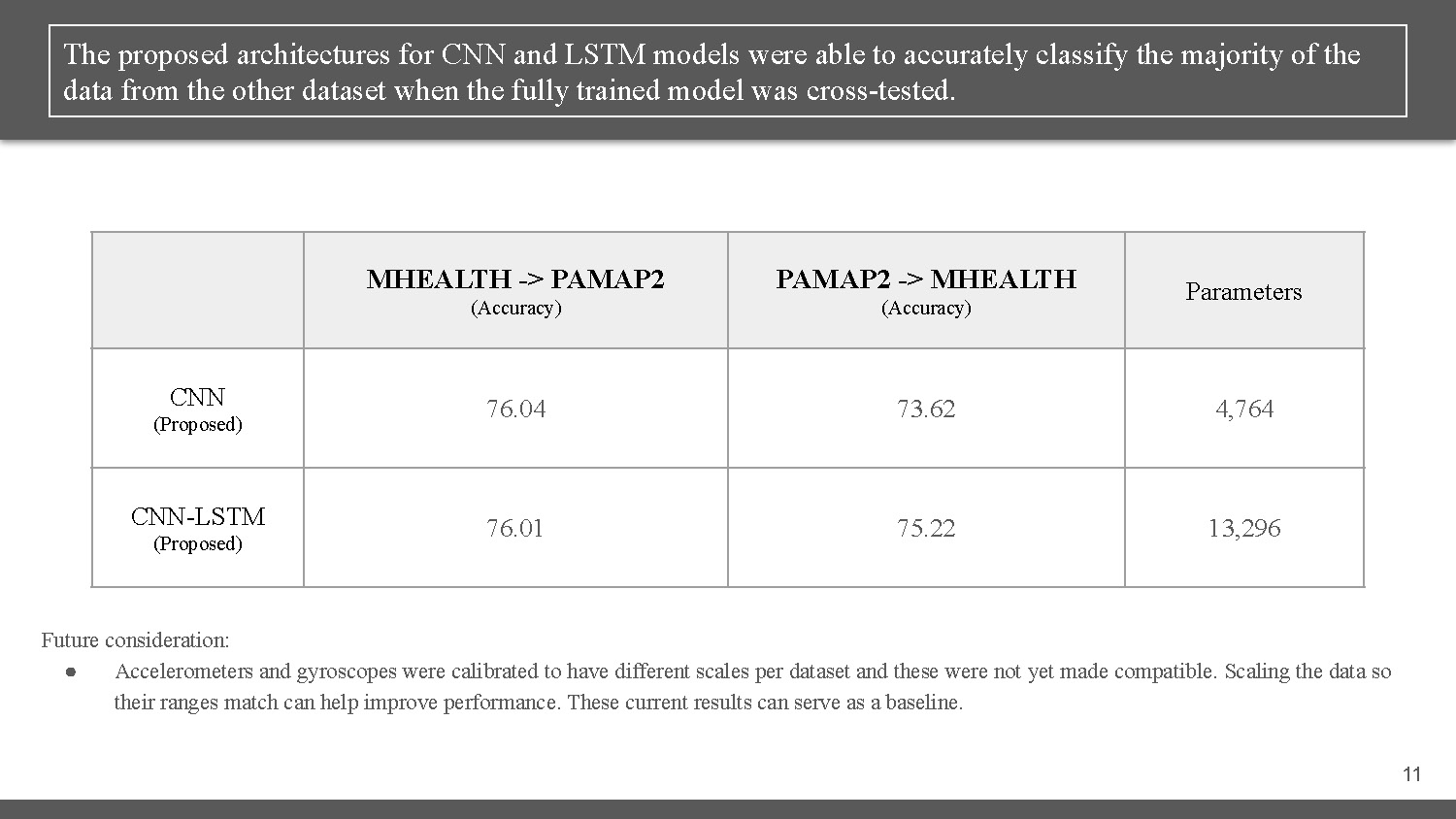

The proposed CNN and CNN-LSTM architectures accurately classified the majority of the data from the other dataset when the fully trained model was cross-tested.

| Model | MHEALTH -> PAMAP2 (Accuracy) | PAMAP2 -> MHEALTH (Accuracy) | Parameters |
|---|---|---|---|
| CNN (Proposed) | 76.04 | 73.62 | 4,764 |
| CNN-LSTM (Proposed) | 76.01 | 75.22 | 13,296 |

Future consideration:

● The accelerometers and gyroscopes were calibrated to different scales in each dataset, and these scales have not yet been made compatible. Scaling the data so that the ranges match may improve performance. The current results can serve as a baseline.
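One possible way to make the sensor ranges compatible is to standardize each channel within its own dataset before cross-testing. The sketch below assumes z-score scaling per channel; this is an illustrative choice, not the project's method, and the readings and function name are made up.

```python
import statistics


def standardize_channels(samples):
    """Z-score each sensor channel (column) using that dataset's own mean and std."""
    columns = list(zip(*samples))
    means = [statistics.mean(col) for col in columns]
    # Fall back to 1.0 for constant channels to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        [(value - m) / s for value, m, s in zip(row, means, stds)]
        for row in samples
    ]


# Made-up readings: rows are time samples, columns are sensor channels.
# dataset_b records the same motion as dataset_a at 10x the scale.
dataset_a = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]]
dataset_b = [[0.0, 10.0], [10.0, 30.0], [20.0, 50.0]]

scaled_a = standardize_channels(dataset_a)
scaled_b = standardize_channels(dataset_b)

# After scaling, both datasets occupy the same range channel by channel.
for row_a, row_b in zip(scaled_a, scaled_b):
    print([round(v, 3) for v in row_a], [round(v, 3) for v in row_b])
```

In a real pipeline the scaling statistics would normally be computed on training data only and then reused for the test data, so that no information leaks from the test set.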

Slide-12

In summary, the proposed CNN architecture showed performance comparable to various literature results with fewer parameters. When the proposed CNN and CNN-LSTM models were cross-applied between the two datasets, they showed good generalizability, with room for improvement.

Slide-13

Final Remarks

Future Work (to be continued through DIS):

● Evaluate CNN-LSTM hybrid models from literature for comparison to proposed CNN-LSTM

● Further evaluate papers to explore more model results and ideas

● Apply to Parkinson's patient data

Slide-14

References

1. https://orestibanos.com/datasets.htm

2. http://archive.ics.uci.edu/ml/datasets/mhealth+dataset

3. Banos, O., Garcia, R., Holgado, J. A., Damas, M., Pomares, H., Rojas, I., Saez, A., Villalonga, C. mHealthDroid: a novel framework for agile development of mobile health applications. Proceedings of the 6th International Work-conference on Ambient Assisted Living and Active Ageing (IWAAL 2014), Belfast, Northern Ireland, December 2-5, (2014).

4. Banos, O., Villalonga, C., Garcia, R., Saez, A., Damas, M., Holgado, J. A., Lee, S., Pomares, H., Rojas, I. Design, implementation and validation of a novel open framework for agile development of mobile health applications. BioMedical Engineering OnLine, vol. 14, no. S2:S6, pp. 1-20 (2015).

5. https://archive.ics.uci.edu/ml/datasets/pamap2+physical+activity+monitoring

6. A. Reiss and D. Stricker. Introducing a New Benchmarked Dataset for Activity Monitoring. The 16th IEEE International Symposium on Wearable Computers (ISWC), 2012.

7. A. Reiss and D. Stricker. Creating and Benchmarking a New Dataset for Physical Activity Monitoring. The 5th Workshop on Affect and Behaviour Related Assistance (ABRA), 2012.

8. Artur Jordao, Antonio Carlos Nazare, Jessica Sena, William Robson Schwartz. Human Activity Recognition Based on Wearable Sensor Data: A Standardization of the State-of-the-Art

9. Fernando Moya Rueda, René Grzeszick, Gernot A. Fink, Sascha Feldhorst, Michael ten Hompel. Convolutional Neural Networks for Human Activity Recognition Using Body-Worn Sensors

10. Yuqing Chen and Yang Xue, "A Deep Learning Approach to Human Activity Recognition Based on Single Accelerometer," in IEEE International Conference on Systems, Man, and Cybernetics, 2015, pp. 1488–1492

11. Sojeong Ha, Jeong-Min Yun, and Seungjin Choi, "Multi-modal convolutional neural networks for activity recognition," in IEEE International Conference on Systems, Man, and Cybernetics, 2015, pp. 3017–3022

12. Sojeong Ha and Seungjin Choi, "Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors," in International Joint Conference on Neural Networks, 2016, pp. 381–388.

13. Wenchao Jiang and Zhaozheng Yin, "Human activity recognition using wearable sensors by deep convolutional neural networks," in 23rd Annual Conference on Multimedia Conference, 2015, pp. 1307–1310.

14. Nils Y. Hammerla, Shane Halloran, Thomas Plotz. Deep, Convolutional, and Recurrent Models for Human Activity Recognition using Wearables

15. Kun Xia, Jianguang Huang, Hanyu Wang. LSTM-CNN Architecture for Human Activity Recognition

Thank you!


For a downloadable version of this presentation, email: I-SENSE@FAU.