Detecting Emotions in Multi-Party Conversations

Slide-1

By Jacob Belga, Hannah Gibilisco, and Serena Walker

Slide-2

Jacob Belga: Introduction

- University of Central Florida (UCF), Class of 2020

- Major: Computer Science

- Future Goals: Research in HCI and a Ph.D. in CS

- Previous Research: Emotion Recognition

Slide-3

Hannah Gibilisco: Introduction

- Stetson University (2017–2021), Computer Science

- Summer Programs: Android Development at UCF (2016), Cybersecurity at Stetson (2017), REU in Computational Neuroscience at FAU (2018)

- Clubs: Programming team, ACM, Hackerspace

Slide-4

Serena Walker: Introduction

- Attending the REU at FAU; researching Human-Computer Interaction

- Member of the Lean In E-Board

- TA for Intro to Programming

Slide-5

Mental Health Crisis

- 200 million workdays lost annually, at a cost of $17–$43.7 billion

- Annual healthcare costs exceed $57 billion

- Tied with cancer as the third most costly condition in the US

- The WHO ranked mental health conditions as the third leading contributor to the global burden of disease (2004), projected to become the leading contributor by 2030

Slide-6

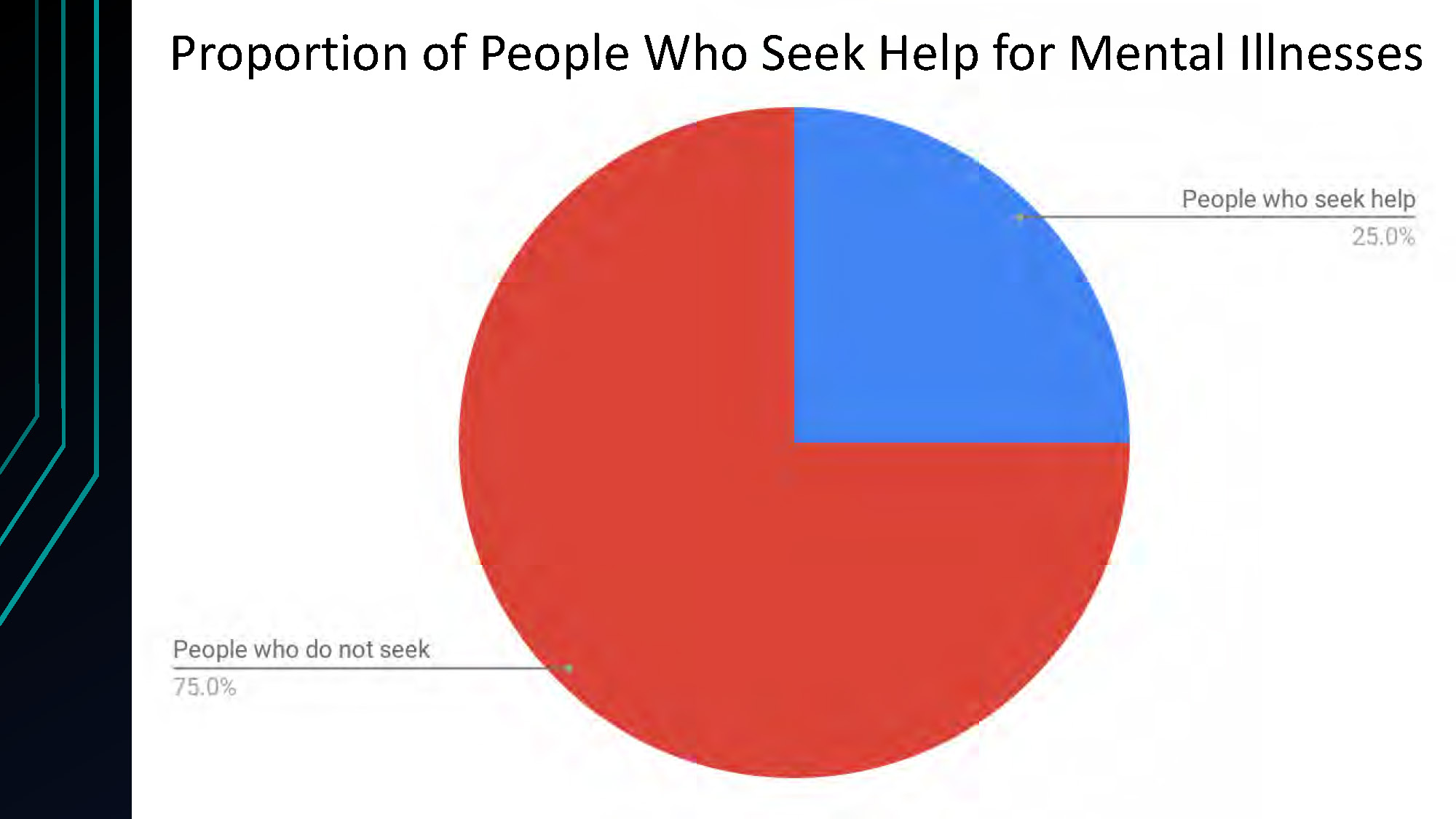

Proportion of People Who Seek Help

Pie chart showing that 75% of people do not seek help and 25% do

Slide-7

Why? Asynchronous Relationships

Slide-8

How can we solve this?

Slide-9

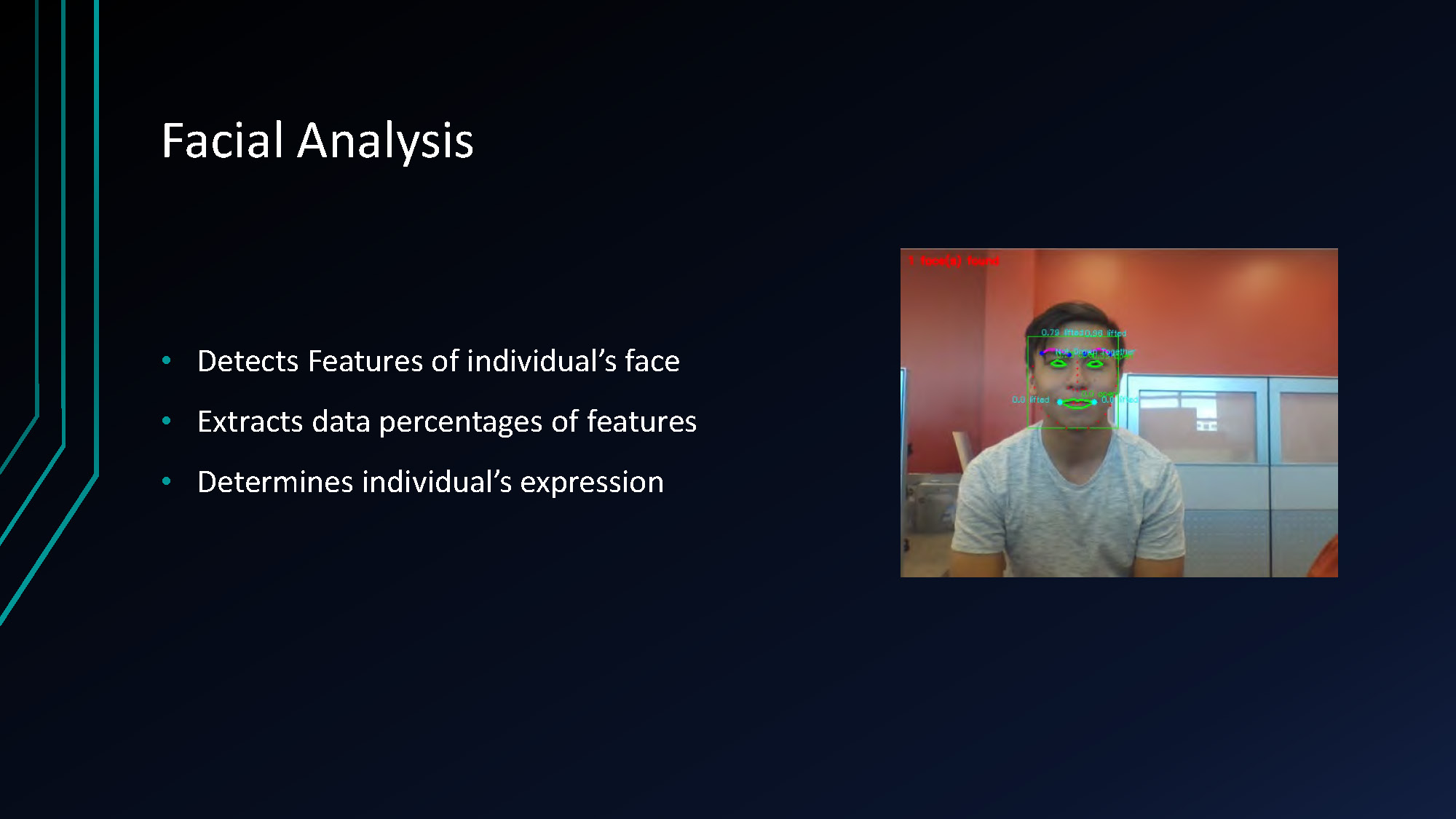

Facial Analysis

- Detects facial features

- Extracts data percentages

- Determines expression
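The slide does not say how the extracted percentages become an expression label. One common approach, assumed here, is to normalize per-emotion scores from a classifier into percentages and report the largest one. The emotion labels, raw scores, and the function names `to_percentages` and `determine_expression` are hypothetical; face detection itself (for example with OpenCV, shown on Slide-12) is not included in this sketch.

```python
from typing import Dict, Tuple


def to_percentages(scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize non-negative raw emotion scores so they sum to 100."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must contain at least one positive value")
    return {label: 100.0 * value / total for label, value in scores.items()}


def determine_expression(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the emotion with the highest percentage and that percentage."""
    percentages = to_percentages(scores)
    label = max(percentages, key=percentages.get)
    return label, percentages[label]


if __name__ == "__main__":
    # Hypothetical raw classifier scores for one detected face.
    raw = {"happy": 6.0, "neutral": 3.0, "sad": 1.0}
    label, pct = determine_expression(raw)
    print(f"{label}: {pct:.1f}%")  # happy: 60.0%
```

Reporting percentages rather than raw scores keeps the displayed values comparable across frames even if the classifier's raw output scale changes.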

Slide-10

Sentiment Analysis

- Displays a speech-to-text transcript with its sentiment data

- Assigns positivity rating

- Calculates speech speed
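The slide does not name the tool behind the positivity rating. The screenshot's "Average Compound Sentiment Rating" label suggests a compound score in the range [-1, 1], like the one produced by VADER; this sketch assumes such scores arrive from an external analyzer and only shows the bookkeeping: averaging the ratings and measuring speech speed, which is assumed here to mean words per minute. The class `SentimentTracker` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SentimentTracker:
    """Accumulates per-utterance compound sentiment scores and speaking rate."""

    compound_scores: List[float] = field(default_factory=list)
    total_words: int = 0
    total_seconds: float = 0.0

    def add_utterance(self, text: str, compound: float, duration_s: float) -> None:
        # Assumption: compound comes from an external sentiment analyzer and
        # lies in [-1, 1], from most negative to most positive.
        if not -1.0 <= compound <= 1.0:
            raise ValueError("compound score must lie in [-1, 1]")
        if duration_s <= 0:
            raise ValueError("utterance duration must be positive")
        self.compound_scores.append(compound)
        self.total_words += len(text.split())
        self.total_seconds += duration_s

    def average_compound(self) -> float:
        """Mean compound score so far (0.0 before any speech)."""
        if not self.compound_scores:
            return 0.0
        return sum(self.compound_scores) / len(self.compound_scores)

    def words_per_minute(self) -> float:
        """Speech speed over all transcribed speech so far."""
        if self.total_seconds == 0:
            return 0.0
        return 60.0 * self.total_words / self.total_seconds


if __name__ == "__main__":
    tracker = SentimentTracker()
    tracker.add_utterance("I am feeling pretty good today", 0.5, 2.0)
    tracker.add_utterance("but work has been stressful", -0.25, 2.0)
    print(tracker.average_compound(), tracker.words_per_minute())  # 0.125 165.0
```

Here 11 words over 4 seconds of speech gives 165 words per minute, and the two ratings average to 0.125 (slightly positive overall).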

Slide-11

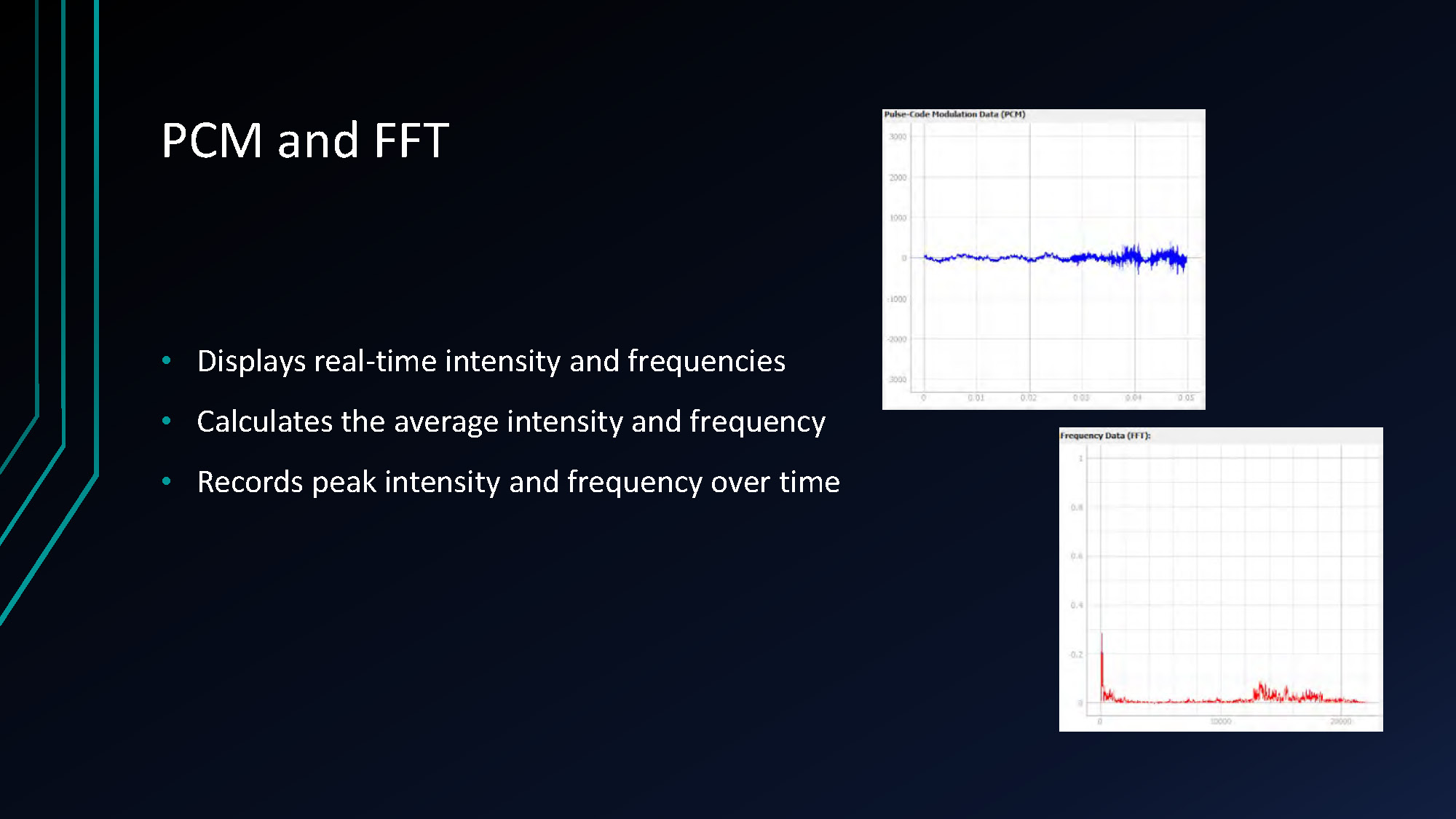

Pulse Code Modulation (PCM) and Fast Fourier Transform (FFT)

- Displays audio intensity and frequencies in real time

- Calculates averages

- Records peak intensity and frequency
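As a rough illustration of these three steps, the sketch below uses NumPy to compute an intensity (root-mean-square amplitude) and a dominant frequency (the largest FFT magnitude bin) for each chunk of mono PCM samples, then keeps running averages and peaks. It assumes "intensity" means RMS amplitude and "peak frequency" means the highest dominant frequency seen so far; the slide does not define either, and `analyze_chunk` and `AudioStats` are hypothetical names.

```python
from typing import Tuple

import numpy as np


def analyze_chunk(pcm: np.ndarray, sample_rate: int) -> Tuple[float, float]:
    """Return (RMS intensity, dominant frequency in Hz) for one mono PCM chunk."""
    samples = pcm.astype(np.float64)
    # Intensity: root-mean-square amplitude of the raw PCM samples.
    rms = float(np.sqrt(np.mean(samples ** 2)))
    # Magnitude spectrum of the real-valued signal and the frequency of each bin.
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(samples.size, d=1.0 / sample_rate)
    spectrum[0] = 0.0  # ignore the DC (0 Hz) component; a silent chunk reports 0 Hz
    peak_freq = float(freqs[int(np.argmax(spectrum))])
    return rms, peak_freq


class AudioStats:
    """Running averages and peaks of per-chunk intensity and dominant frequency."""

    def __init__(self) -> None:
        self.count = 0
        self.sum_rms = 0.0
        self.sum_freq = 0.0
        self.peak_rms = 0.0
        self.peak_freq = 0.0

    def update(self, rms: float, freq: float) -> None:
        self.count += 1
        self.sum_rms += rms
        self.sum_freq += freq
        self.peak_rms = max(self.peak_rms, rms)
        self.peak_freq = max(self.peak_freq, freq)

    def averages(self) -> Tuple[float, float]:
        """Return (average RMS, average dominant frequency)."""
        if self.count == 0:
            return 0.0, 0.0
        return self.sum_rms / self.count, self.sum_freq / self.count


if __name__ == "__main__":
    rate = 8000
    t = np.arange(800) / rate  # 0.1 s chunks give 10 Hz frequency resolution
    stats = AudioStats()
    for freq, amp in [(440, 1000), (880, 2000)]:
        chunk = (amp * np.sin(2 * np.pi * freq * t)).astype(np.int16)
        stats.update(*analyze_chunk(chunk, rate))
    print(stats.averages(), stats.peak_rms, stats.peak_freq)
```

The chunk length sets the trade-off: longer chunks give finer frequency resolution (sample rate divided by chunk length) but update the display less often.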

Slide-12

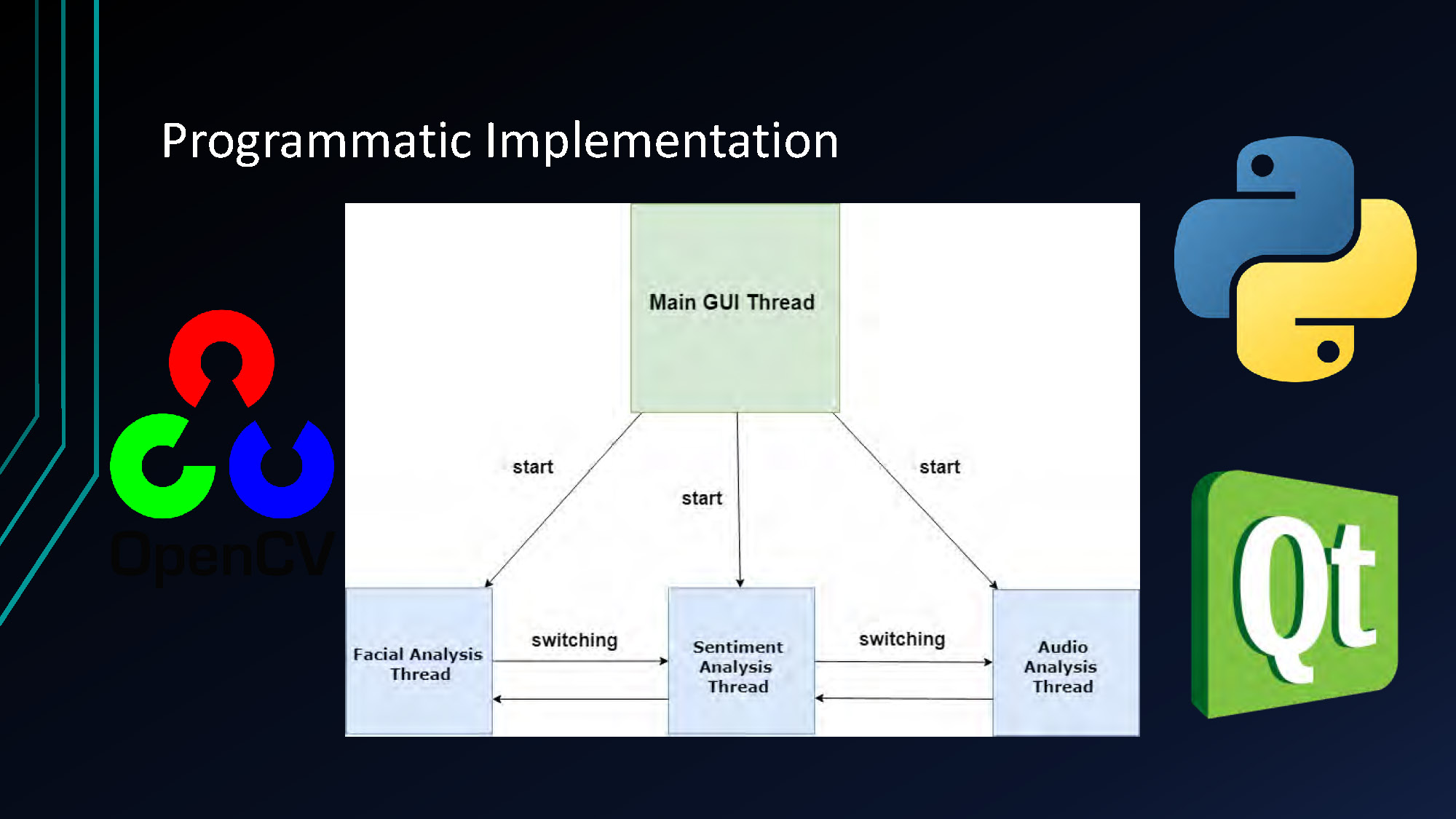

Programmatic Implementation

On the left is the red, green, and blue logo for OpenCV. On the right are the logos for Python and Qt. In the center is a diagram with a green "Main GUI Thread" box at the top, connected to three blue boxes below: "Facial Analysis Thread", "Sentiment Analysis Thread", and "Audio Analysis Thread". Arrows labeled "start" point from the main thread to each of the three lower threads. Arrows labeled "switching" point between the facial analysis, sentiment analysis, and audio analysis threads.
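The diagram shows a main GUI thread starting three analysis threads. The project itself uses Qt, but this sketch swaps in Python's standard `threading` and `queue` modules so it runs without Qt; the worker names and the `analyze` callables are hypothetical placeholders for the real facial, sentiment, and audio analyzers. The pattern it illustrates: workers never touch the interface directly; they post results to a thread-safe queue that only the main thread reads.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional, Tuple

# Messages are (worker name, result); a result of None means "worker finished".
Message = Tuple[str, Optional[str]]


def analysis_worker(name: str, analyze: Callable[[int], str], frames: List[int],
                    results: "queue.Queue[Message]", stop: threading.Event) -> None:
    """Run one analysis over the input stream, posting results for the GUI thread."""
    for frame in frames:
        if stop.is_set():  # e.g. set when the user presses "Stop Stream"
            break
        results.put((name, analyze(frame)))
    results.put((name, None))


def run_pipeline(frames: List[int]) -> Dict[str, List[str]]:
    """Start the three analysis threads and collect their output on the calling thread."""
    # Hypothetical stand-ins for the real facial, sentiment, and audio analyzers.
    analyzers: Dict[str, Callable[[int], str]] = {
        "facial": lambda f: f"face result {f}",
        "sentiment": lambda f: f"sentiment result {f}",
        "audio": lambda f: f"audio result {f}",
    }
    results: "queue.Queue[Message]" = queue.Queue()
    stop = threading.Event()
    threads = [
        threading.Thread(target=analysis_worker,
                         args=(name, fn, frames, results, stop), daemon=True)
        for name, fn in analyzers.items()
    ]
    for thread in threads:
        thread.start()  # the "start" arrows in the diagram

    collected: Dict[str, List[str]] = {name: [] for name in analyzers}
    finished = 0
    # Only this (main/GUI) thread reads results and would update the widgets.
    while finished < len(threads):
        name, value = results.get()
        if value is None:
            finished += 1
        else:
            collected[name].append(value)
    for thread in threads:
        thread.join()
    return collected


if __name__ == "__main__":
    print(run_pipeline([0, 1, 2]))
```

In a Qt application, a signal emitted from a worker thread and connected to a slot on a main-thread object is delivered as a queued call in the main thread, which plays the same role as the queue here.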

Slide-13

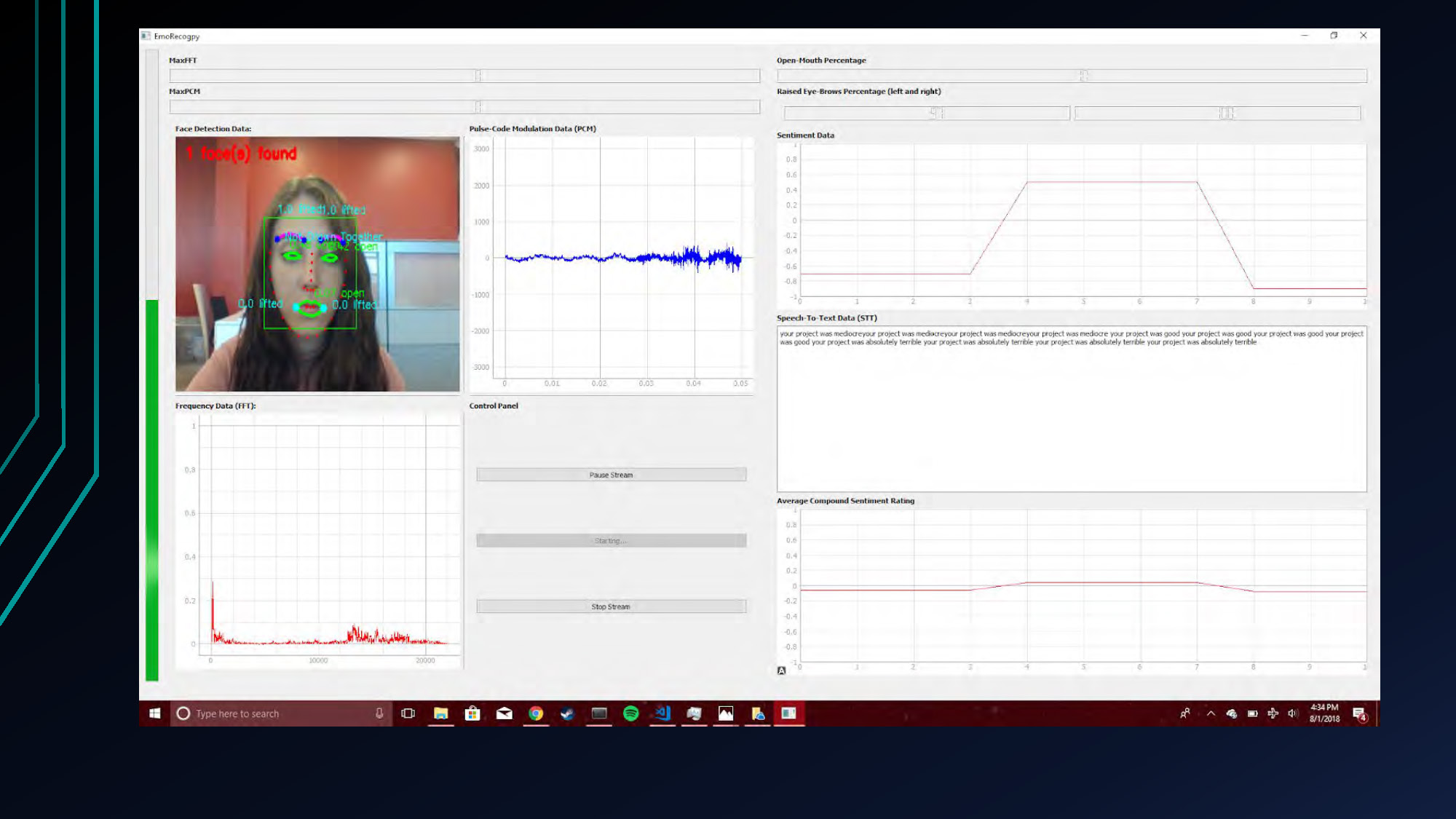

A screenshot of a computer desktop showing an application interface. The application window is titled "EmoDpy". The interface displays multiple panels. The top-left panel shows a webcam feed with a person's face. The face is highlighted with green bounding boxes and lines. Text in this panel reads "Face Detection Stats:" and "3 face(s) found". To the right of the webcam feed are several graphs and data displays, including "Pulse Code Modulation Data (PCM)", "Sentiment Data", "Frequency Data (FFT)", and "Average Compound Sentiment Rating". Below the webcam panel is a "Control Panel" with "Pause Stream" and "Stop Stream" buttons. There is also a text box labeled "Speech To Text Data (STT)" filled with sample text.

Slide-14

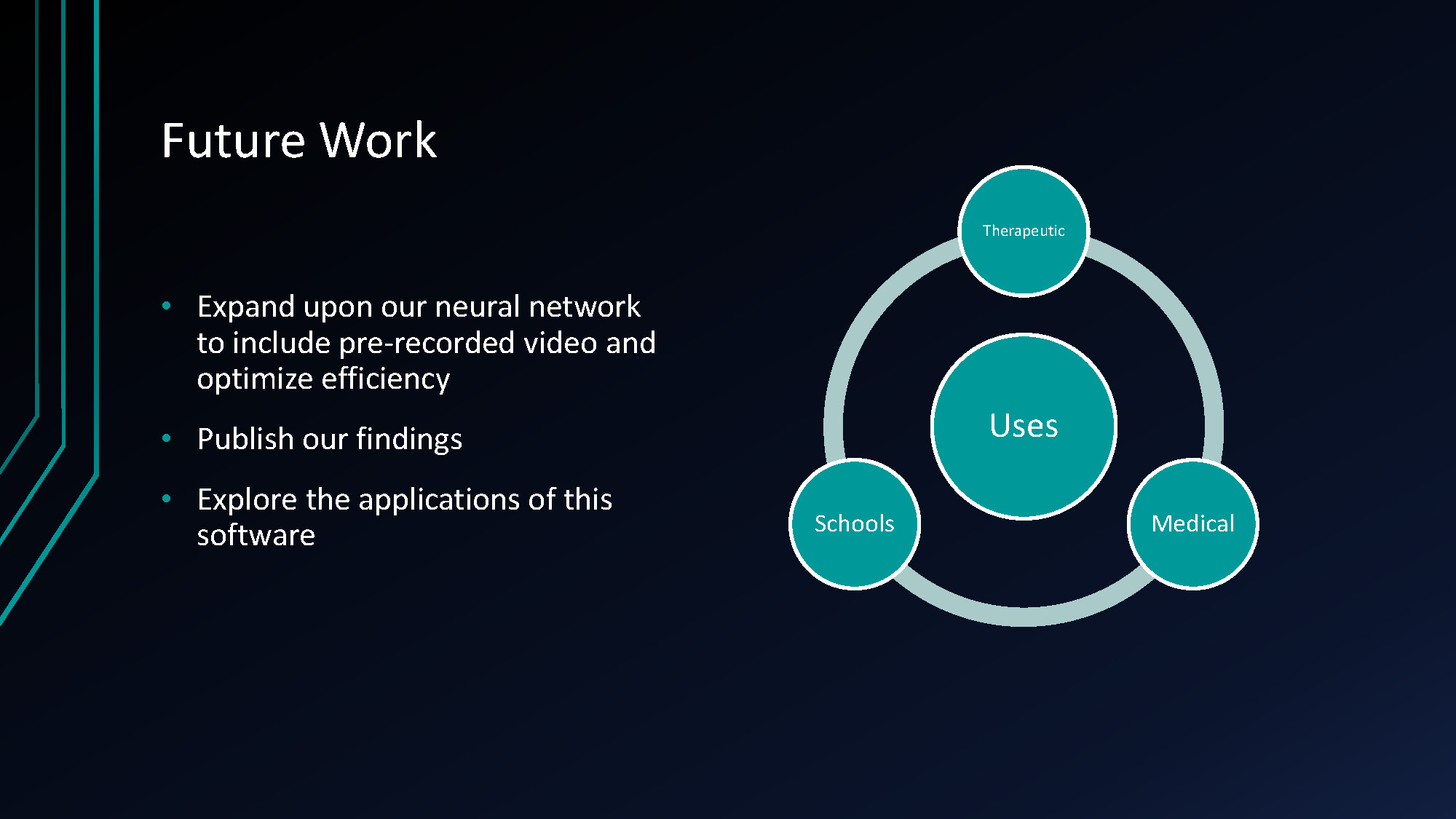

Future Work

- Expand the neural network to support pre-recorded video and optimize its efficiency

- Publish findings

- Explore applications in therapeutic and medical fields

Slide-15

So what?

"The whole is greater than the sum of its parts." - attributed to Aristotle

Slide-16

Questions?

End of Presentation

For a downloadable version of this presentation, email: I-SENSE@FAU.